Ran into an interesting issue with my Routerboard CRS226-24G-2S+ “Cloud Router Switch” which is basically a smart layer 3 capable switch running Mikrotik’s RouterOS.

Whilst its specs mean it’s intended for switching rather than routing, it runs the full Mikrotik RouterOS, so it’s entirely possible to drop a port out of the switching hardware and use it to route traffic, in my case between the LAN and WAN connections.

Routerboard’s website rates its routing capabilities at between 95.9 and 279 Mbits, and in my own iperf tests before putting it into action I was able to do around 200Mbits of routing. With only 40/10 Mbits WAN performance, this would work fine for my needs until we finally get UFB (fibre-to-the-home) in 2017.
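To put those numbers side by side, here is a rough back-of-the-envelope sketch in Python. It only uses the figures quoted above; the variable and function names are made up for illustration, and it assumes the rated and iperf figures still hold once real firewall rules are loaded, which is exactly the assumption that turned out to be shaky.

```python
# Figures quoted in the post; all names here are illustrative only.
RATED_ROUTING_MBITS = (95.9, 279.0)  # range published on Routerboard's website
MEASURED_IPERF_MBITS = 200.0         # my own iperf result before deployment
WAN_DOWN_MBITS = 40.0                # downstream side of the 40/10 connection


def headroom(capacity_mbits: float, demand_mbits: float) -> float:
    """Return how many times over the capacity covers the demand."""
    return capacity_mbits / demand_mbits


# Assumption: plain forwarding with no extra firewall/NAT load.
worst_case = headroom(RATED_ROUTING_MBITS[0], WAN_DOWN_MBITS)
measured = headroom(MEASURED_IPERF_MBITS, WAN_DOWN_MBITS)

print(f"worst-case rated headroom: {worst_case:.1f}x")
print(f"measured iperf headroom:   {measured:.1f}x")
```

On paper that is a comfortable margin even at the low end of the rated range, which is why I didn’t expect the CPU to be the bottleneck.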

However, between that test and putting it into production, it’s ended up with a lot more firewall rules, including NAT. When doing some work on the switch, I noticed that the CPU was often hitting 100%, which is never good for your networking hardware.

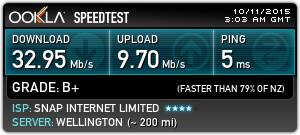

I wondered how much impact that maxed out CPU could be having on my WAN connection, so I used the very non-scientific Ookla Speedtest with the CRS doing my routing:

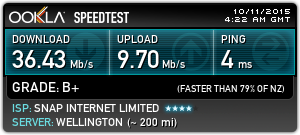

After stripping all the routing work from the CRS and moving it to a small Routerboard 750 ethernet router, I’ve gained a few additional Mbits of performance:

The CRS and the Routerboard 750 both feature a MIPS 24Kc 400MHz CPU, so there’s no effective difference between the devices. In fact the switch is arguably faster, as it’s a newer generation chip and has twice the memory, yet it performs worse.

The CPU usage that was formerly pegging at 100% on the CRS dropped to around 30% on the 750 when running these tests, so there’s clearly something going on in the CRS which is giving it a handicap.

In theory the overhead of switching should be minimal since it’s handled by dedicated hardware, but I wonder if there’s something weird going on, like the main CPU having to give up time to handle events/operations for the switching hardware.

So yeah, a bit annoying. It’s still an awesome managed switch, but it would be nice if they dropped the (terrible) “Cloud Router Switch” name and just sold it for what it is: a damn nice layer 3 capable managed switch, not a router (unless they give it some more CPU so it can get the job done as well!).

For now the dedicated 750 as the router will keep me covered, although it will cap out at 100Mbits in terms of both wire speed and routing capability, so I may need to get a higher-specced router come UFB time.