The previous owners of our house had left us with a reasonably comprehensive alarm system wired throughout the house, however like many alarm systems currently in homes, it required an analogue phone line to be able to call back to any kind of monitoring service.

To upgrade the alarm to an IP module via the monitoring company would be at least $500 in parts and seemed to consist of hooking the phone line to essentially a VoIP ATA adaptor which can phone home to their service.

As a home owner I want it internet connected so I can do self-monitoring, give me the ability to control remotely and to integrate it with IP-based camera systems. Most of the conventional alarm companies seem to offer none of things, or only very expensive sub-standard solutions.

To make things worse, their monitoring services are also pretty poor. Most of the companies I spoke to would receive an alarm, then call me to tell me about it/check with me and only then send someone out to investigate. The existing alarm company the previous owner was using didn’t even offer a callout security service!

Spark (NZ incumbent telco) recently brought out a consumer product called Morepork (as seen on stuff!) which looks attractive for your average non-techie consumer, but I’m not particularly keen to tie myself to Spark’s platform and it is very expensive, especially when considering I have to discard an existing functional system and start from scratch. There’s also some design weaknesses like the cameras being mains dependent, which I don’t consider acceptable given how easy it is to cut power to a house.

So I decided that I’d like to get my existing alarm IP connected, but importantly, I wanted to retain complete control over the process of generating an alert and delivering it to my phone so that it’s as fast as possible and also, as reliable as possible.

Not only did I want to avoid the human factor, but I’m also wary of the proprietary technologies used by most of the alarm companies off-the-shelf solutions. I have some strong doubts about the security of a number of offers, not to mention life span (oh sorry that alarm is EOL, no new mobile app for you) and the level of customisation/integration offered (oh you want to link your alarm with your camera motion detection? Sorry, we don’t support that).

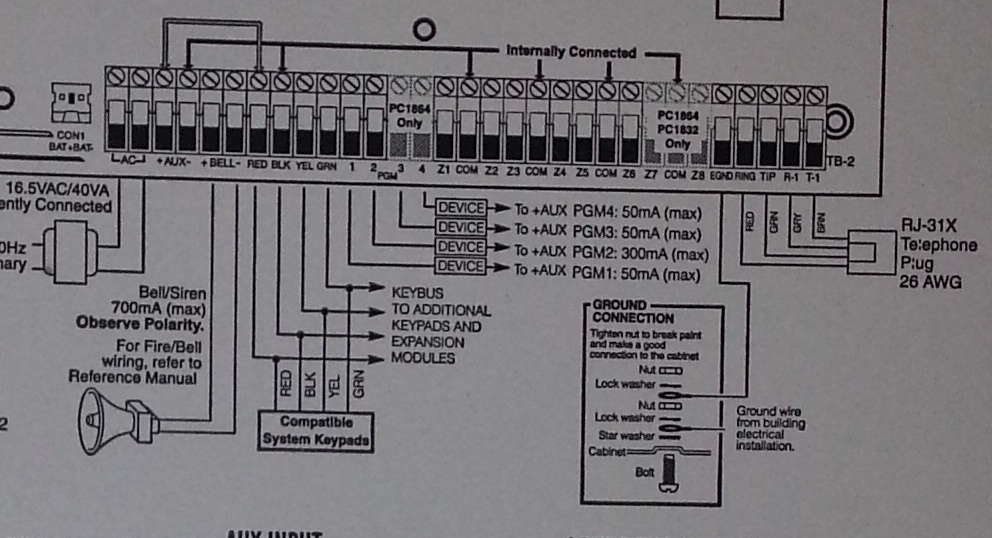

I did some research on my alarm system and found it’s one of the DSC PowerSeries range which is a large Canadian company operating globally. The good thing about them being a large global player is that there’s a heap of reference material about their products online.

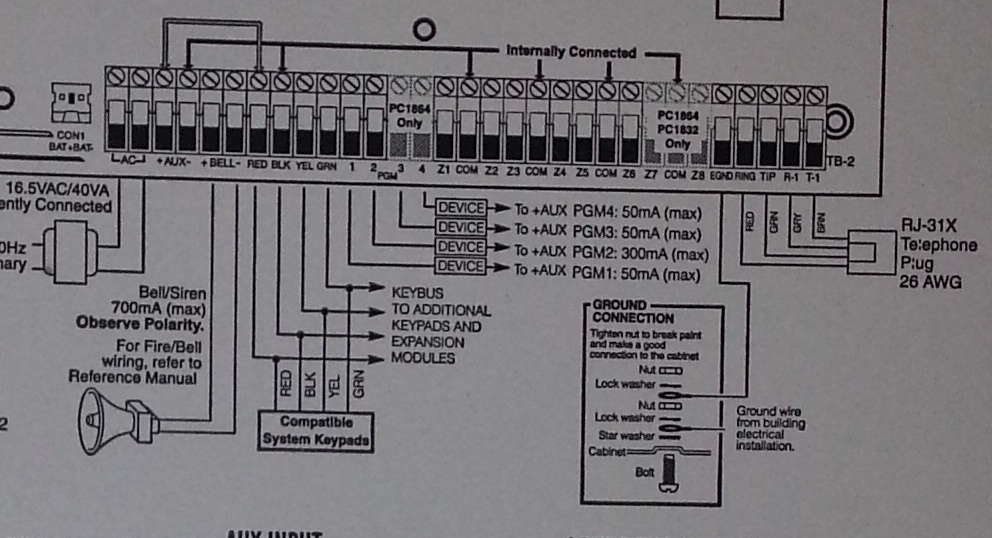

With a quick search I was able to find user guides, installer guides, programming guides and more. They also include a full wiring diagram inside the alarm control centre which is exceptionally useful, since it essentially explains how you can connect any kind of sensors yourself which can save a whole heap of money compared to paying for an alarm company to do the installation.

I wish all my electronic devices came with documentation this detailed.

The other great thing about this alarm is that since DSC is so massive, there’s an ecosystem of third party vendors offering components for it. Searching for third party IP modules, I ran into this article where the author purchased an IP module from a company known as Envisalink and used it’s third party API to write custom code to get alarm events and issue commands.

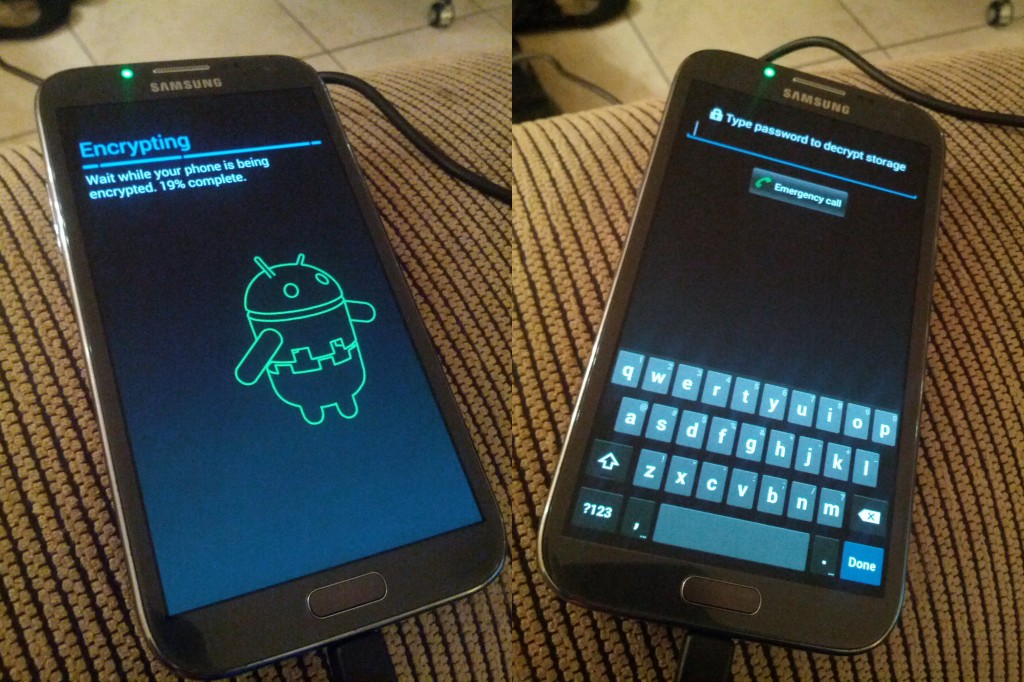

A third party API sounded perfect, so I purchased the EnvisaLink EVL-4 for $239 NZD delivered and did the installation myself. In theory the installation is easy, just a case of powering down the alarm (not touching any 240V hard wired mains in the process) and connecting it via the 4 wire keypad bus.

In my case it ended up being a bit more complex since the previous owner had helpfully never given me any of the master/installer alarm codes, so I ended up doing a factory reset of the unit and re-programming it from scratch (which means all the sensors, etc) which takes about a day to figure out and do the first time. The plus side is that this gave me complete control over the unit and I was able to do things like deprogram the old alarm company’s phone number to stop repeat failed callout attempts.

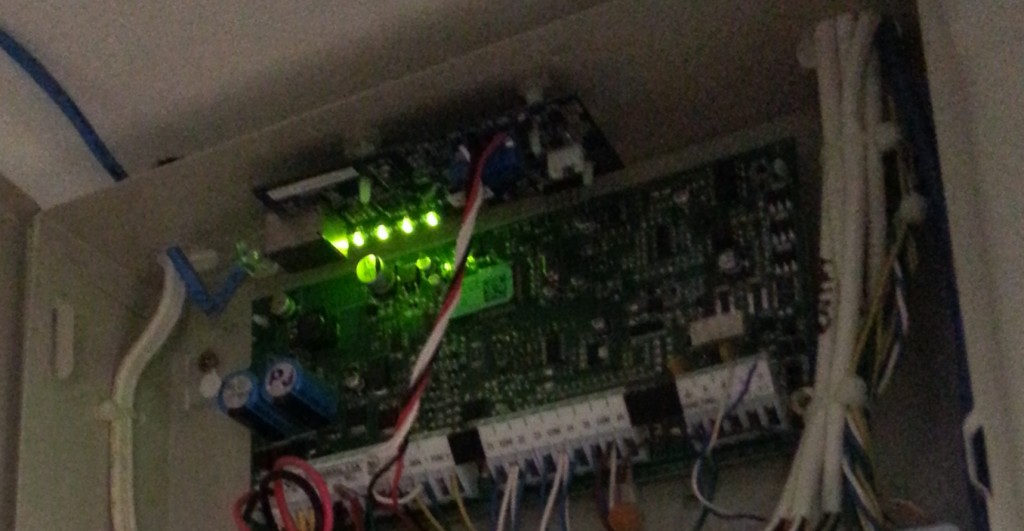

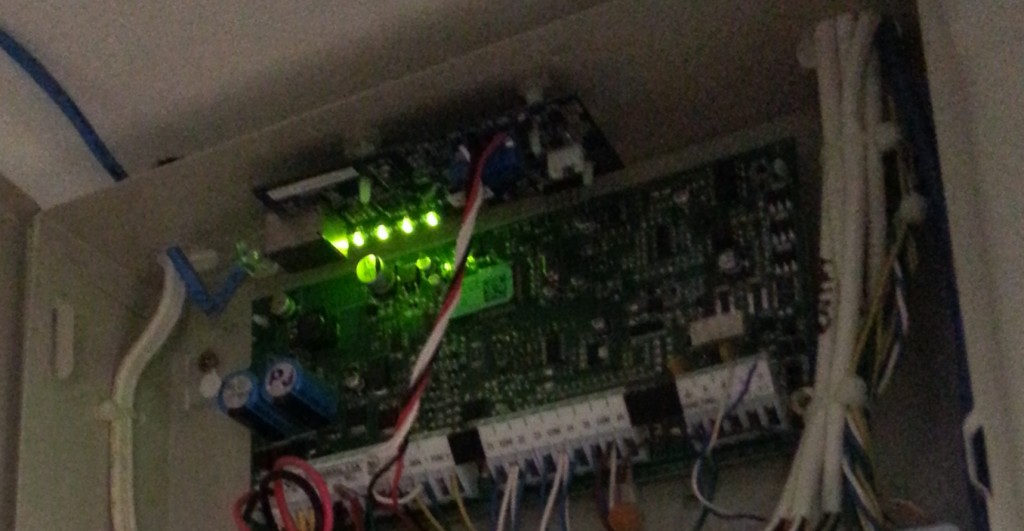

Once connected, the EnvisaLink unit was remarkably hassle free to setup – it grabbed a DHCP lease, connected to the internet and phoned home to the vendor’s free monitoring service.

EnvisaLink unit installed at the top above the alarm control circuit. A++ for LED ricing guys!

The EnvisaLink hardware is a great little unit and the third party programmer’s interface is reasonably well documented and works without too much grief. Unfortunately the rest of the experience of the company selling it isn’t particularly good. Specifically:

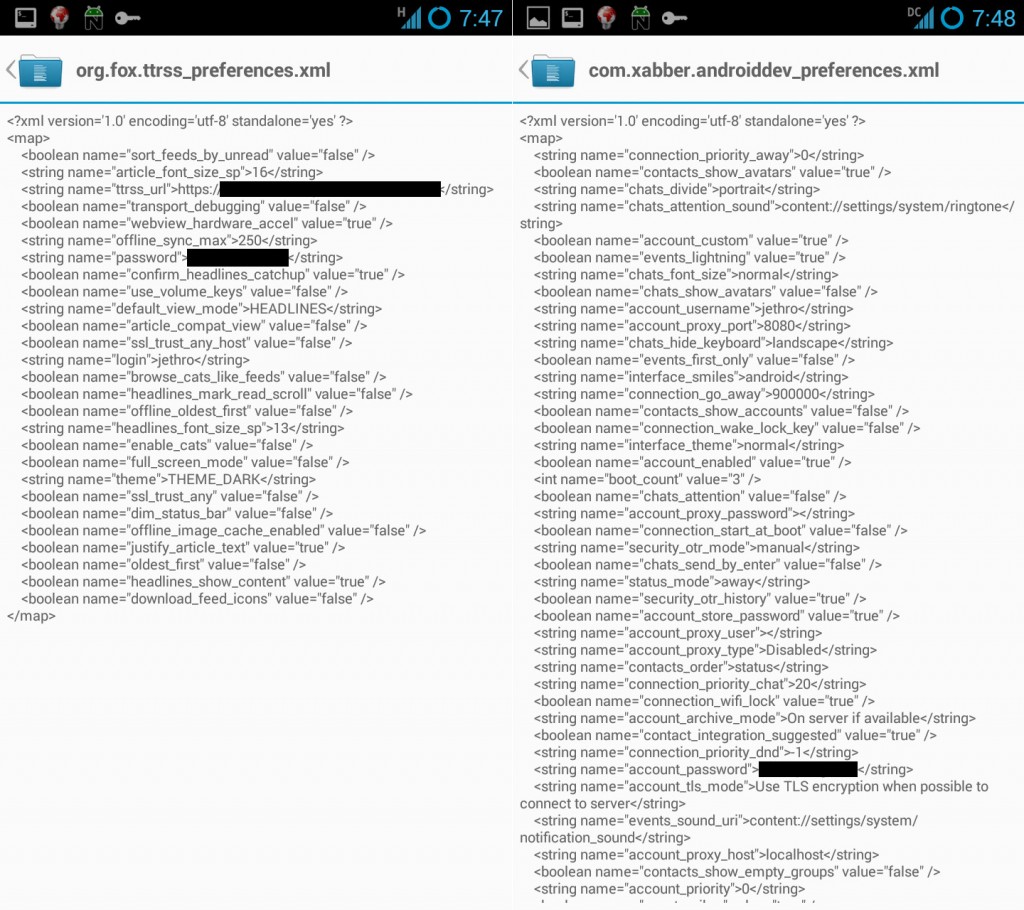

- Their website places the order by emailing their accounts mailbox. How do I know? Because they printed the email including my credit card number in full and sent it as the packing slip on it’s journey across the world. Great PCI compliance guys!

- They show the product as working with Android, iPhone and Blackberry. They carefully avoid saying it has native apps, they actually mean it has a “smart phone” optimized version, which is as terrible as it sounds.

- I can’t enable alerts on their service since their signup process keeps sending my email a blank validation code. So I had an alarm that couldn’t alarm me via their service.

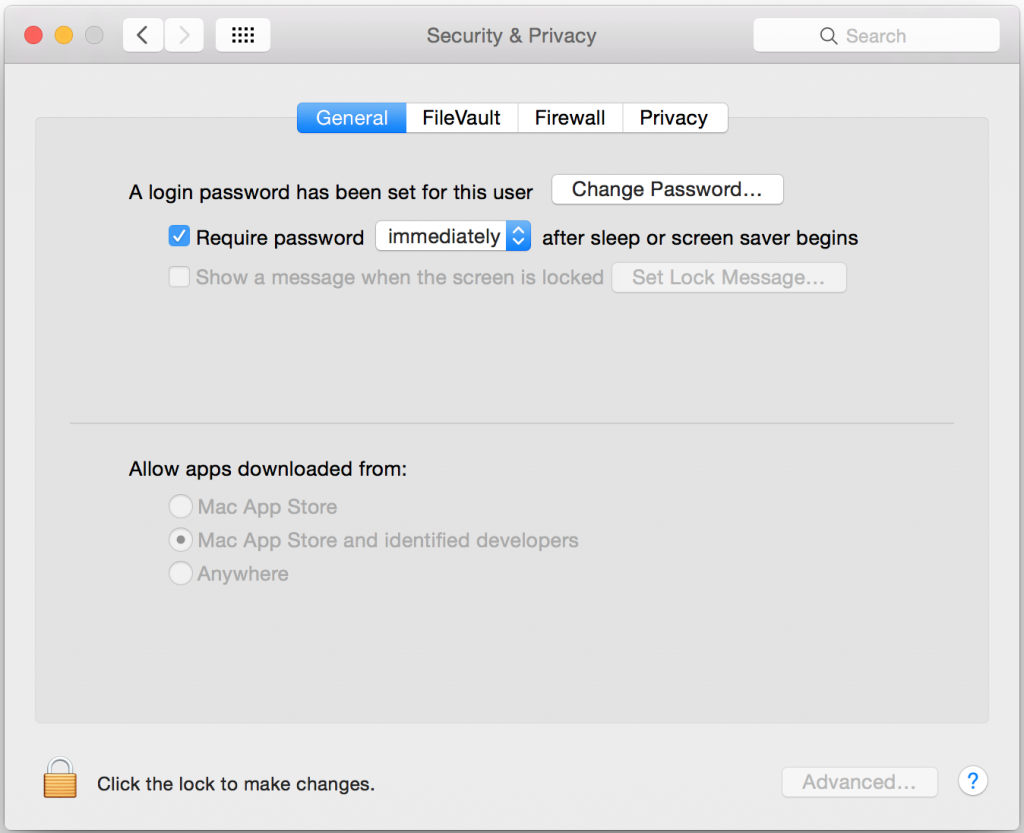

- No 2FA on logging into the alarm website, so you could brute force login and then disable the alarm remotely… or set it off if you just want to annoy the occupants.

I haven’t dug into the communications between the unit and it’s vendor, I sure hope it’s SSL/TLS secured and doesn’t have the ability to remotely exploit it and upgrade it, but I’m not going to chance it. Even if they’ve properly encrypted and secured comms between the unit and their servers, the security is limited to the best practices of the company and software which already look disturbingly weak.

Thankfully my requirements for the module is purely it’s third party API so I can integrate with my own systems, so I can ignore all these issues and put it on it’s own little isolated VLAN where it can’t cause any trouble and talk to anything but my server.

So having sorted out the hardware and gotten the alarm onto the network, I now needed some software that would at least meet the basic alerting requirements I have.

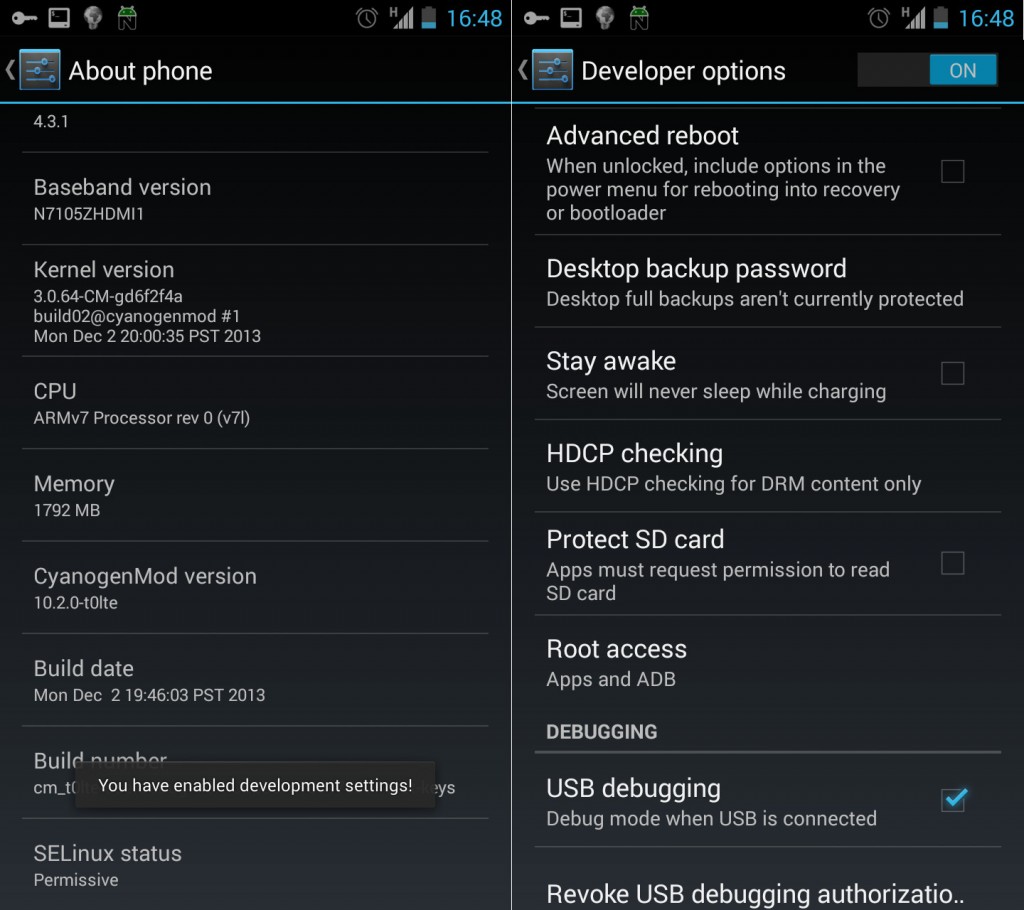

There’s an existing comprehensive Java/Android-based product (plainly labeled as “DSC Security Server”) which looks very configurable, but I specifically wanted something open source to make sure the alarm integration remained maintainable long term and to use Google Push Notifications for instant alerting on both Android (which supports long running background processes) and iOS (which does not – hence you must use push notifications via APNS).

I ended up taking advantage of some existing public code for handling the various commands and error responses from the Envisalink/DSC alarm combination but reworked it a bit so I now have a module system that consists of “alarm integrators” exchanging information/events with the alarm system and “alarm consumers” which decide what to do with the events generated. These all communicate via a simple beanstalk queue.

This design gives ultimate simplicity – each program is not much more than a small script and there’s a standard documented format for anyone whom wants to add support for other alarm integrators or alarm consumers in future. I wanted it kept simple, making it the sort of thing you could dump onto a Raspberry Pi and have anyone with basic scripting skills be able to debug and adjust it.

I’ve assembled these programs into an open source package I’m calling “HowAlarming”“, hopefully it might be useful for anyone in future with the same alarm system or wanting a foundation for building their own software for other alarms (or even their own alarms).

The simplest solution to get alerts from the system would be by sending SMS using one of the many different web-based SMS services, but I wanted something I can extend to support receiving images from the surveillance system in future and maybe also sending commands back.

Hence I’ve written a companion Android app which receives messages from HowAlarming via push notifications and maintains an event log and the current state of the alarm.

UX doens’t get much better than this.

It’s pretty basic, but it offers the MVP that I require. Took about a day to hack together not having done any Android or Java before, thankfully Android Studio makes the process pretty easy with lots of hand holding and easy integration with the simulators and native devices.

TBD if I can hack something together in a day not having done any native app development before that’s better than many of the offerings from the alarm companies currently around, they need to be asking themselves some hard questions. At the very least, they should get someone to write some apps that can pull their customer’s alarm state from their current phone-home infrastructure – there’s probably good money to be made giving existing customers on non-IP era alarms upgrades given the number of installations out there.

So far my solution is working well for me. It’s not without it’s potential problems, for example alarm communications are now at the mercy of a power/internet outage whereas previously as long as the phone line was intact, it could call out. However this is easily fixed with my UPS and 3G failover modem – the 3G actually makes it better than previously.

The other potential issue is that I don’t know what insurance would classify this nature of self-monitoring as. I have mine declared as “un-monitored” to avoid any complications, but if your insurance conditions require monitoring I’m unsure if a home-grown solution would meet those requirements (even if it is better than 90% of the alarm companies). So do your research and check your contracts & terms.