I’ve recently been working to migrate my personal infrastructure from a very conventional and ageing 8-year-old colocation server to a new cloud-based approach.

As part of this migration I’m simplifying what I have down to the fewest possible services and offloading a number of them to best-of-breed cloud SaaS providers.

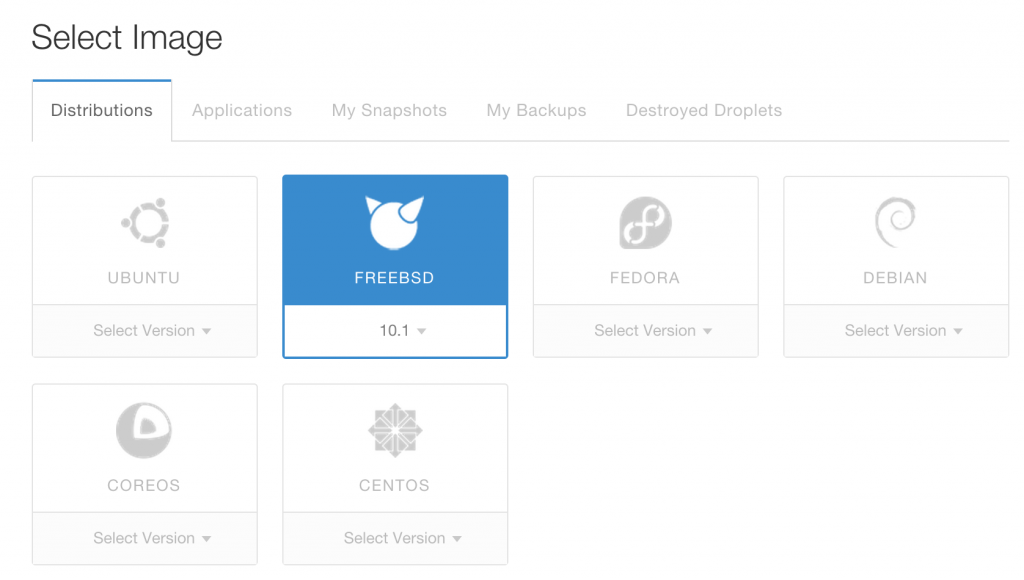

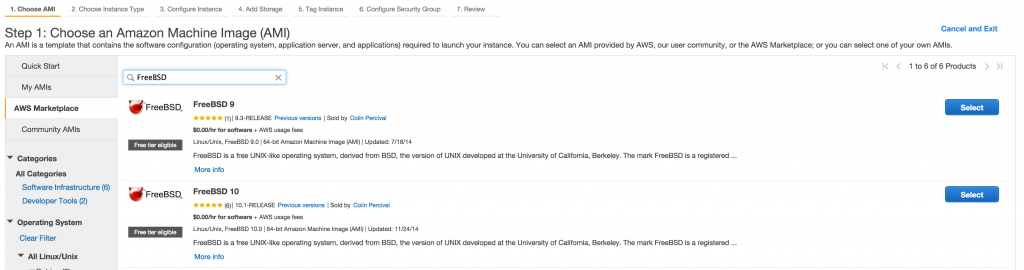

Of course I’m still going to have a few servers for running various applications where it makes the most sense, but ideally it will only be a handful of small virtual machines and a bunch of development machines that I can spin up on demand using cloud providers like AWS or Digital Ocean, only paying for what I use.

The Puppet Master Problem

To make this manageable I needed a configuration management system such as Puppet, so that the whole build process for new servers could be automated (and fast!). But running Puppet goes against my plan of as-simple-as-possible, as it means running another server (the Puppet master). I could have gone for something like Ansible, but I dislike the agent-less approach and prefer to have a proper agent so that boxes can build themselves automatically, such as when using autoscaling.

So I decided to use Puppet masterless. It’s completely possible to run Puppet against local manifest files and have it apply them, but there’s the annoying issue of how to get Puppet manifests to servers in the first place. That tends to be left as an exercise for the reader: there are various collections of hacks floating around on the web, and major organisations seem to grow their own homespun tooling to address it.
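As a rough illustration of what “running Puppet against local manifest files” means, here is a minimal Python sketch that builds and runs a `puppet apply` command. The directory layout (`modules/` and `manifests/site.pp` under one root) and the function names are assumptions made for this example, not something Pupistry or Puppet mandates.

```python
import subprocess
from pathlib import Path


def puppet_apply_command(code_dir: str, noop: bool = False) -> list[str]:
    """Build a `puppet apply` command line for a local copy of Puppet code.

    Assumed (hypothetical) layout:
      <code_dir>/modules/            Puppet modules
      <code_dir>/manifests/site.pp   entry-point manifest
    """
    root = Path(code_dir)
    cmd = ["puppet", "apply", f"--modulepath={root / 'modules'}"]
    if noop:
        # --noop makes Puppet report what it would change without changing it.
        cmd.append("--noop")
    cmd.append(str(root / "manifests" / "site.pp"))
    return cmd


def run_masterless(code_dir: str, noop: bool = False) -> int:
    """Run Puppet against the local manifests and return its exit code."""
    return subprocess.run(puppet_apply_command(code_dir, noop)).returncode
```

Running Puppet this way is the easy part. The sketch says nothing about how `code_dir` got onto the server, which is exactly the gap described above.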

Just getting a well-functioning Puppet masterless setup took far longer than desired, and it seems silly given that everyone doing Puppet masterless has to repeat the same steps over and over again.

User-data is another case of stupidity with every organisation writing their own variation of what is basically the same thing – some lines of bash to get a newly launched Linux instance from nothingness to running Puppet and applying the manifests for that organisation. There’s got to be a better way.

The blessing and challenges of r10k

It gets even more complex when you take the use of r10k into account. r10k is a Puppet workflow solution that makes it easy to include various upstream Puppet modules and pin them to specific versions. It supports branches, so you can do clever things like tell one server to apply a specific new branch to test a change you’ve made before rolling it out to all your servers. In short, it’s fantastic and if you’re not using it with Puppet… you should be.

However, using r10k does mean you need access to all the git repositories that are included in your Puppetfile. This is generally dealt with by having the Puppet master run r10k and download all the git repos using a deployer key that grants it access to the repositories.

But this doesn’t work so well without a master, because you have to set up deployer access keys for every machine to be able to read every one of your git repositories. And if a machine is ever compromised, the key needs to be changed for every repo and every server again, which is hardly ideal.

r10k’s approach of allowing you to assemble various third-party Puppet modules into a (hopefully) coherent collection of manifests is very powerful – grab modules from the Puppet Forge, from GitHub or from some other third party; r10k doesn’t care, it makes it all work.

But this has the major failing of essentially limiting your security to the trustworthiness of all the third parties you select.

In some cases the author is relatively unknown and could suddenly decide to start including malicious content. In other cases the security of the platform providing the modules is at risk (e.g. the Puppet Forge doesn’t require any two-factor auth for module authors) and an attacker could compromise the platform in order to compromise thousands of machines.

Some organisations fix this by still using r10k but always forking any third party modules before using them, but this has the downside of increased manual overhead to regularly check for new updates to the forked repos and pulling them down. It’s worth it for a big enterprise, but not worth the hassle for my few personal systems.

The other issue, aside from security, is that if any one of these third-party repos ever fails to download (e.g. the repo was deleted), your server will fail to build. Nobody wants to find that someone chose to delete the GitHub repo you rely on just minutes before your production host autoscaled and failed to start up. :-(

Pupistry – the solution?

I wanted to fix the lack of a consistent, robust approach to masterless Puppet and provide a good way to use r10k with it, so in my limited spare time over the past month I’ve been working on Pupistry. (Pupistry? puppet + artistry == Pupistry! Hopefully my solution is better than my naming “genius”…)

Pupistry is a solution for implementing reliable and secure masterless Puppet deployments by taking Puppet modules assembled by r10k and generating compressed and signed archives for distribution to the masterless servers.

Pupistry builds on the functionality offered by the r10k workflow, but rather than requiring you to implement site-specific bootstrap and workflow mechanisms, Pupistry executes r10k, assembles the combined modules and then generates a compressed artifact file. It then optionally signs the artifact with GPG and finally uploads it into an Amazon S3 bucket along with a manifest file.
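To make the “artifact plus manifest” idea concrete, here is a minimal Python sketch of the packaging step only. It is not Pupistry’s implementation: the file names, the `puppetcode` archive prefix and the manifest fields (`version`, `file`, `sha256`) are all invented for illustration, and the r10k run, GPG signing and S3 upload are left out entirely.

```python
import hashlib
import json
import tarfile
import time
from pathlib import Path


def build_artifact(source_dir: str, output_dir: str) -> dict:
    """Pack source_dir into a .tar.gz and write a small manifest beside it.

    source_dir is assumed to already hold the modules assembled by r10k.
    The manifest layout is hypothetical, chosen only for this sketch.
    """
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)

    # A UTC timestamp serves as a simple, sortable version identifier.
    version = time.strftime("%Y%m%d%H%M%S", time.gmtime())
    artifact = out / f"artifact.{version}.tar.gz"

    # Everything under source_dir lands under "puppetcode/" in the archive.
    with tarfile.open(artifact, "w:gz") as tar:
        tar.add(source_dir, arcname="puppetcode")

    # The checksum lets a client detect a corrupt or truncated download.
    checksum = hashlib.sha256(artifact.read_bytes()).hexdigest()

    manifest = {"version": version, "file": artifact.name, "sha256": checksum}
    (out / "manifest.json").write_text(json.dumps(manifest, indent=2))
    return manifest
```

The point of the small manifest file is that clients only need to fetch a few bytes to learn whether anything has changed, and only download the full archive when it has.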

The masterless Puppet machines then run Pupistry, which checks for a new version of the manifest file. If there is one, it downloads the new artifact and does an optional GPG validation before applying it and running Puppet. Pupistry ships with a daemon, which means you get the same convenience as a standard Puppet master & agent setup and don’t need dodgy cronjobs everywhere.
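The client-side loop described above can be sketched roughly as follows. This is a simplified illustration, not Pupistry’s code: the manifest fields (`version`, `file`, `sha256`) and the callback names are assumptions, and a SHA-256 comparison stands in for GPG validation. A checksum only catches corruption; unlike a GPG signature it does not prove who produced the artifact.

```python
import hashlib
from pathlib import Path
from typing import Callable, Optional


def checksum_matches(artifact_path: str, expected_sha256: str) -> bool:
    """Return True if the file's SHA-256 digest equals the expected value."""
    digest = hashlib.sha256(Path(artifact_path).read_bytes()).hexdigest()
    return digest == expected_sha256


def agent_step(
    fetch_manifest: Callable[[], dict],
    fetch_artifact: Callable[[str], str],
    apply: Callable[[str], None],
    applied_version: Optional[str],
) -> Optional[str]:
    """Run one check-and-apply cycle and return the version now applied.

    fetch_manifest: returns the remote manifest as a dict (hypothetical format)
    fetch_artifact: downloads the named artifact and returns its local path
    apply:          unpacks the artifact and runs Puppet against it
    """
    manifest = fetch_manifest()

    # Cheap path: only the small manifest was fetched, nothing else to do.
    if manifest["version"] == applied_version:
        return applied_version

    path = fetch_artifact(manifest["file"])
    if not checksum_matches(path, manifest["sha256"]):
        raise ValueError("artifact failed validation, refusing to apply")

    apply(path)
    return manifest["version"]
```

A daemon would call `agent_step` on a timer and remember the returned version between runs. Passing the fetch and apply steps in as callables keeps the decision logic easy to test without a real bucket or a real Puppet run.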

To make life even easier, Pupistry will even spit out bootstrap files for your platform which set up each server from scratch to install, configure and run Pupistry, so you don’t need to write line after line of poorly tested bash code to get your machines online.

It’s also FAST. It can check for a new manifest in under a second, much faster than Puppet master or r10k being run directly on the masterless server.

Because Pupistry is artifact-based, you can be sure your servers will always build, since all the Puppet code is packaged up. This is great for autoscaling, although you still want to use a tool like Packer to create an OS image with Pupistry pre-loaded, to remove the risk of RubyGems being unavailable or a newer version of Pupistry failing.

Try it!

https://github.com/jethrocarr/pupistry

If this sounds up your street, please take a look at the documentation on the GitHub page above and also the introduction tutorial I’ve written on this blog to see what Pupistry can do and how to get started with it.

Pupistry is naturally brand new and at MVP stage, so if you find bugs please file an issue in the tracker. It’s also worth checking the tracker for any other known issues with Pupistry before getting started with it in production (because you’re racing to put this brand new unproven app into production, right?).

Pull requests for improved documentation, bug fixes or new features are always welcome, as is beer. :-)

I intend to keep developing this for myself as it solves my masterless Puppet needs really nicely, but I’d love to see it become a more popular solution that others use instead of spinning up some home-grown weirdness again and again.

I’ve put some time into making it easy to use (I hope) and have also written bootstrap scripts for most popular Linux distributions and FreeBSD, but I’d love feedback, good & bad. If you’re using Pupistry and love it, let me know! If you tried Pupistry but it had some limitation or issue that prevented you from adopting it, let me know what it was; I might be able to help. Better yet, if you find a blocker to using it, fix it and send me a pull request. :-)