I’ve used Awstats for years as my website statistics/reporting program of choice – it’s trivial to setup, reliable and works with Apache log files and requires no modification to the website or usage of remote tools (like with Google Analytics).

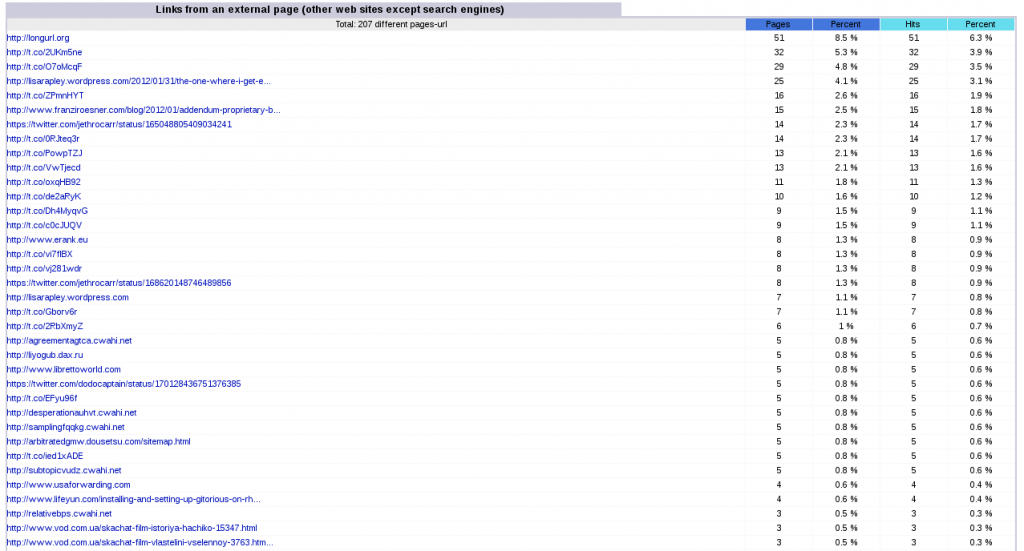

One of the handy features is the “Links from an external page” display, which is a great way of finding out where sudden bursts of hits are coming from, such as news posts mentioning your website or other bloggers linking back.

Sadly over the past couple of years it’s getting less useful thanks to the horrible wonder that is URL shortening.

URL shorteners have always been a controversial service – whilst they can be a useful way of making some of the internet’s more horrible website URLS usable, they cause a number of long term issues:

- Centralisation – The internet works best when decentralised, but URL shortening makes a large number of links dependent on a few particular organisations who may or not be around in the future. There’s already been a number of link shortening companies who have closed down killing large numbers of links and there will undoubtedly be more in the future.

- Link Hiding – Short URLs are a great way to send someone a link and have them open it without realising what content they’re actually about to open. It could be as innocent as a prank for a friend or as bad as malicious malware or scamming websites.

- Performance – It takes an extra DNS query (or several) to lookup the short URL servers before the actual destination can be looked up. This sounds like a minor issue, but it can add up when on high latency connections (eg mobile) or when connecting to international content on NZ’s wonderful internet and can add up to a number of seconds sometimes.

- Privacy – a third party can collate large amounts of information about an individual’s browsing history if they have a popular enough URL shortening service.

Of course URL shortening isn’t entirely evil, there’s a few valid use cases where they are acceptable or at least forgivable:

- Printed materials with URLs on them for manual entry. Nobody likes typing more than they need to, that’s understandable.

- Quickly sending temporary links to people via IM or email where the full URL breaks due to the client application’s inability to phrase the URL correctly.

Anything other than the above is inexcusable, computers are great at hiding the complexities of large bits of information, there’s no need for your blog, social network or application to use short URLs where there is no human entry factor involved.

Twitter is particularly guilty at abusing short URLs – part of this was originally historic, but when Twitter had the opportunity to fix, they chose to instead contribute further towards the problem.

Back in the early days of Twitter, there was no native URL handling, so in order to fit many links into the maximum tweet size of 140chars, users would use a URL shortener such as the classic tinyurl.com or more recent arrivals such as bit.ly to keep the URL lengths as small as possible.

Twitter later decided to implement their own URL shortening service called t.co and now enforce the re-writing of all URLs posted via Twitter to use t.co links, in a semi-transparent fashion where some/all of the original URL will be shown in the tweet, but the actual hyperlink will always go through t.co.

This change offers some advantage to users in that they were no longer dependent on external providers closing down and breaking all their links, as well as having some security advantages in that Twitter maintain lists of bad URLs (URLs they consider to serve malware or other unwanted content) to help stop the spread of dodgy content.

But it also gave Twitter the ability to track click data to figure out which links users were clicking on, I imagine this information would be highly valuable to advertisers. (Google do a very similar thing with the Google results web pages, where all clicks are first directed through a Google server to track what results users select, before the user is delivered to the requested page).

The now mandatory use of URL shorteners on Twitter has lead to a situation where it’s no longer easy to track which tweets or even, what tweeters, are leading to the source of your hits.

Even more confusingly, the handling of referred URLs is inconsistent depending on the browser/client following the link. The vast majority will log as the short URL version, but some will be smart enough to provide the referred URL *before* the referring took place.

RFC 2616 doesn’t touch on how shortened URLs should be handled when referring and leaves the issue of how 301 redirects should have their referrers handled up to the implementers decision. And their are valid arguments for using the original page vs the short URL as the referrer.

For example, for this tweet I have about 9 visits via http://twitter.com/jethrocarr/status/170112859685126145 and 29 visits via http://t.co/0RJteq3r, which throws out hit-count based ordering of the results:

Got to love Twitter & shortened URLs - most of these relate to tweets, but to which tweets? No easy way to track back.

A much better solution, would have been for twitter to display shortened versions of URLs in the tweet text to meet the 140 char limit, but the actual link href record featuring the full URL – for example, a tweet could have “jethrocarr.com/i-like-…..” as the link text to fit within 140 chars, but the actual href record would be the full “jethrocarr.com/i-like-cake” URL.

Whilst tweets are known as being 140 chars, there’s actually far more information than that sorted about each tweet: location co-ordinates, full URL information, date, time and more, so there is no excuse for Twitter to not be able to retain that URL data – of course, that information has value for them for advertising and tracking purposes, so I wouldn’t expect it to ever go away.

(As a side note, there’s an excellent write up on ReadWriteWeb about the structure of a tweet and associated information)

Over all, shortened URLs are just a pain for dealing with and it would be far better if people avoided them as much as possible, essentially if you’re using a short URL and it’s not because a user will be manually typing out content, then you’re doing it wrong.

Also keep in mind that many sites have their own shortish URL variations. For example, this article can be accessed via both date/name and ID number:

https://www.jethrocarr.com/2012/02/26/why-i-hate-url-shorteners

https://www.jethrocarr.com/?p=1453

Many people also run their own private shorteners, quite common with popular sites such as news websites wanting to retain control of the link process and is a much better idea if you plan to have lots of short URLs for your website for a valid reason.

Piwik is another good open source analytics package. Like Google, but open source.

I moved to it from awstats ages ago.

Just in case you weren’t aware of it.

Tim

Looks neat! Will have to take a further look at Piwik, probably not so useful for my blog, but certain useful for tracking visitors on some of my other sites and seeing how their browsing habits work.

Super stuff… what type of article management software do you make use

of? Do you publish and manage your articles by hand?

Just curious. Thank you!

This blog runs on a pretty stock-standard installation of WordPress. There’s a couple plugins used to push messages out to Twitter, but not a lot else.