Linux is a pretty hardy operating system that will take a lot of abuse, but there are ways to make even a Linux system unhappy and vengeful by messing with available resources.

I’ve managed to trigger all of these at least once, and sometimes a few times before finally learning my lesson, so I’ve decided to sit down and make a list for anyone interested.

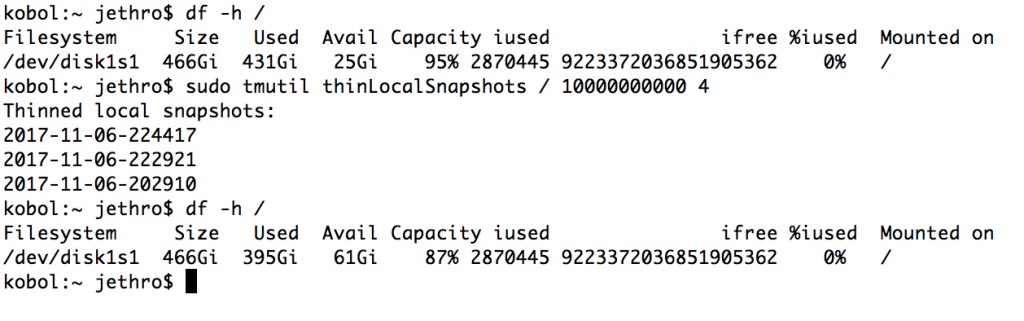

Disk Space

Issue:

Running out of disk. This is a wonderful way to cause weird faults with services like databases: writes start failing with a “No space left on device” error (ENOSPC), and many services will stall or keep retrying until there is sufficient disk space available again to allow writes to complete.

This leads to some delightful errors, such as websites failing to load because the dynamic pages are waiting on the database, which in turn is waiting on disk. Or maybe Apache can’t write any more PHP session files to disk, so no PHP-based pages load.

And mail servers love not having disk. Thankfully, in all the cases I’ve seen, Sendmail and Dovecot just halt and retain messages in memory without causing a loss of data (although a reboot while this is occurring could be interesting).

Resolution:

For production systems I always carefully consider the partition layout, creating separate partitions for the data of key services such as databases, so that an issue such as out-of-control logging or bloated tmp directories can’t impact them.

This issue is pretty easy to catch with good monitoring. Packages such as Nagios include disk usage checks in their stock versions that can alert at configurable thresholds (e.g. 80% of disk used).
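If you have no monitoring at all yet, the threshold idea is simple enough to sketch in a few lines of Python. This is only an illustration, not how Nagios does it: the function names and the 80%/90% thresholds are my own assumptions. Note that the figure may differ slightly from what df reports, since df accounts for blocks reserved for root.

```python
import shutil


def disk_usage_percent(path="/"):
    """Return the percentage of the filesystem holding `path` that is in use."""
    usage = shutil.disk_usage(path)
    if usage.total == 0:
        return 0.0  # pseudo-filesystems can report a size of zero
    return 100.0 * usage.used / usage.total


def classify(percent, warn_at=80.0, crit_at=90.0):
    """Map a usage percentage to a Nagios-style status string."""
    if percent >= crit_at:
        return "CRITICAL"
    if percent >= warn_at:
        return "WARNING"
    return "OK"


if __name__ == "__main__":
    percent = disk_usage_percent("/")
    print(f"/ is {percent:.1f}% full: {classify(percent)}")
```

Run it from cron against each partition you care about and you at least get a warning before the database does.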

Disk Access

Issue:

Don’t unplug a disk whilst Linux is trying to use it. Just don’t. Really. Things get really unhappy and you get to look at nice output from ps aux showing processes blocked for disk.

The typical mistake here is unplugging a device such as a USB hard drive in the middle of a backup, causing the backup process to halt, and typically the kernel will spew warnings into the system logs about how naughty you’ve been.

Fortunately this is almost always recoverable. The process will eventually time out or terminate, and the storage device will work fine on the next connection, although possibly with some filesystem errors or a corrupt file if it was halfway through writing to disk.
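Processes blocked on disk show up in ps aux with a state of D (uninterruptible sleep). If you would rather not squint at the full process list, the same information can be read from /proc. The following is a rough sketch under the assumption of a standard Linux /proc layout; the function names are made up for this example.

```python
import os


def parse_stat(stat_line):
    """Split a /proc/<pid>/stat line into (pid, command, state).

    The command is wrapped in parentheses and may itself contain spaces
    or parentheses, so we split on the first "(" and the last ")".
    """
    open_paren = stat_line.index("(")
    close_paren = stat_line.rindex(")")
    pid = int(stat_line[:open_paren])
    command = stat_line[open_paren + 1:close_paren]
    state = stat_line[close_paren + 1:].split()[0]
    return pid, command, state


def blocked_processes(proc_root="/proc"):
    """Return (pid, command) for every process in uninterruptible sleep."""
    blocked = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue
        stat_path = os.path.join(proc_root, entry, "stat")
        try:
            with open(stat_path, errors="replace") as handle:
                pid, command, state = parse_stat(handle.read())
        except (OSError, ValueError, IndexError):
            continue  # the process went away while we were looking
        if state == "D":
            blocked.append((pid, command))
    return blocked


if __name__ == "__main__":
    if os.path.isdir("/proc"):
        for pid, command in blocked_processes():
            print(f"{pid}\t{command}")
```

A process briefly in D state is normal; the same PIDs sitting there run after run is the sign that something has been unplugged.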

Resolution:

Don’t be a muppet. Or at least educate users that they probably shouldn’t unplug the backup drive while it’s still busily flashing away.

Networked Storage

Issue:

When using networked storage, the kernel still considers it to be just as critical as local storage, so if there’s a disruption accessing data on a network file system, processes will again halt until the storage returns.

This can have mixed blessings – in a server environment where the storage should always be accessible, halting can be the best solution since your programs will wait for the storage to return and hopefully there will be no data loss.

However, in a mobile environment this can cause programs to hang indefinitely, waiting for storage that might never be reconnected.

Resolution:

In this case, the soft option can be used when mounting network shares, which will cause the kernel to return an error to the process using the storage if it becomes unavailable so that the application (hopefully) warns the user and terminates gracefully.

Using a daemon such as autofs to automatically mount and unmount network shares on demand can help reduce this sort of headache.
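To find out which of your network mounts would hang rather than return an error, you can check the mount options listed in /proc/mounts. Here is a small sketch that flags NFS mounts lacking the soft option. It assumes the usual six-column /proc/mounts format and only looks at the nfs and nfs4 filesystem types; the function name is hypothetical.

```python
NFS_TYPES = {"nfs", "nfs4"}


def hard_nfs_mounts(mounts_text):
    """Return the mount points of NFS filesystems not mounted with 'soft'."""
    result = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        mount_point, fs_type, options = fields[1], fields[2], fields[3]
        if fs_type in NFS_TYPES and "soft" not in options.split(","):
            result.append(mount_point)
    return result


if __name__ == "__main__":
    try:
        with open("/proc/mounts") as handle:
            for mount_point in hard_nfs_mounts(handle.read()):
                print(f"{mount_point} will block if the server goes away")
    except OSError:
        print("could not read /proc/mounts")
```

On a server this list is probably exactly what you want; on a laptop, anything it prints is a candidate for the soft option or autofs.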

Low Memory

Issue:

Running out of memory. I don’t just mean RAM, but swap space too (the pagefile, for you Windows users). When you run out of memory on almost any OS, it won’t be that happy. Linux handles this situation by killing off processes using the OOM (out-of-memory) killer in order to free up memory again.

This makes sense in theory (out of memory, so let’s kill things that are using it), but the problem is that it doesn’t always kill the ones you want, leading to anything from amusement to unmanageable boxes.

I’ve had some run-ins with the OOM killer before, where it killed the ssh daemon on overloaded boxes and prevented me from logging into them. :-/

On the other hand, just giving your system many GB of swap space so that it doesn’t run out of memory isn’t a good fix either. Swap is terribly slow and your machine will quickly grind to a near-halt.

The performance of using swap is so bad it’s sometimes difficult to even log in to a heavily swapping system.

Resolution:

Buy more RAM. Ideally you shouldn’t be trying to run more than possible on a box – of course it’s possible to get by with swap space, but only to a small degree due to the performance pains.

In a virtual environment, I’m leaning towards running without swap and letting the OOM killer just kill processes on guests if they run out of memory. Usually it’s better to take the hit of a process being killed than the more painful slowdown from swap.

And with VMs, if the worst case happens, you can easily reboot and console into the systems, compared to physical hosts where you can’t afford to lose manageability at all.

Of course this really depends on your workload and what you’re doing. The best solution is monitoring, so that you don’t end up in this situation in the first place.

Sometimes it just happens due to a one-off process, and it’s difficult to always foresee memory issues.
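As a starting point for that monitoring, the kernel exposes everything you need in /proc/meminfo. The sketch below reports how much RAM is still available and how much swap is in use. It assumes a kernel new enough to provide the MemAvailable field, and the function names are my own.

```python
def parse_meminfo(text):
    """Parse the contents of /proc/meminfo into {field name: value in kB}."""
    values = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            values[name.strip()] = int(parts[0])
    return values


def memory_pressure(info):
    """Return (percent of RAM still available, percent of swap in use)."""
    ram_available = 100.0 * info["MemAvailable"] / info["MemTotal"]
    swap_total = info.get("SwapTotal", 0)
    swap_used = 0.0
    if swap_total:  # avoid dividing by zero on boxes without swap
        swap_used = 100.0 * (swap_total - info.get("SwapFree", 0)) / swap_total
    return ram_available, swap_used


if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as handle:
            ram_available, swap_used = memory_pressure(parse_meminfo(handle.read()))
        print(f"RAM available: {ram_available:.1f}%, swap used: {swap_used:.1f}%")
    except (OSError, KeyError):
        print("could not read memory figures from /proc/meminfo")
```

Low available RAM combined with climbing swap usage is the pattern to alert on, ideally well before the OOM killer gets involved.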

Incorrect Time

Issue:

Having the incorrect time on your server may appear only a nuisance, but it can lead to many other more devious faults.

Any time-sensitive application can experience weird issues. I’ve seen problems such as Samba clients being unable to see files newer than the system time, and BIND breaking for any lookups. Clock issues are WEIRD.

Resolution:

We have NTP, it works well. Turn it on and make sure the NTP process is included in your process monitoring list.
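Checking that the NTP process is running doesn’t tell you whether the clock is actually right, so it can be worth measuring the offset as well. Below is a rough sketch of a one-shot SNTP query using only the standard library. The server name pool.ntp.org and the two-second timeout are assumptions for the example, and this is no substitute for a real NTP client, since it ignores most of the protocol.

```python
import socket
import struct
import time

NTP_EPOCH_OFFSET = 2208988800  # seconds between 1900-01-01 and 1970-01-01


def parse_transmit_time(packet):
    """Extract the server's transmit timestamp (as Unix time) from an NTP reply."""
    if len(packet) < 48:
        raise ValueError("NTP packet too short")
    # Bytes 40-47 hold the transmit timestamp: 32-bit seconds, 32-bit fraction.
    seconds, fraction = struct.unpack("!II", packet[40:48])
    return seconds - NTP_EPOCH_OFFSET + fraction / 2**32


def clock_offset(server="pool.ntp.org", timeout=2.0):
    """Roughly estimate (server time - local time) in seconds."""
    request = b"\x1b" + 47 * b"\0"  # LI=0, version 3, mode 3 (client)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sent_at = time.time()
        sock.sendto(request, (server, 123))
        reply, _ = sock.recvfrom(512)
        received_at = time.time()
    # Compare against the midpoint of the round trip to cancel most network delay.
    return parse_transmit_time(reply) - (sent_at + received_at) / 2
```

Calling clock_offset() and alerting when the absolute value exceeds a second or so would have caught every clock problem I’ve described above.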

Authentication Source Outages

Issue:

In larger deployments it’s common to have a central source of authentication such as LDAP, Kerberos, RADIUS or even Active Directory.

Linux actually does a remarkable number of lookups against the configured authentication sources in regular operation. Aside from the lookup needed whenever a user wishes to log in, Linux will look up the user database every time the attributes of a file are viewed (user/group information), which is pretty often.

There’s some level of inbuilt caching, but unless you’re running a proper authentication caching daemon that allows offline mode, a prolonged outage of the authentication server will not only make it impossible for users to log in, but also break simple commands such as ls, as the process will be trying to look up user/group information.

Resolution:

There’s a reason why we always have two or more sources for key network services such as DNS and LDAP. Take advantage of the redundancy built into the design.

However, this doesn’t help if the network is down entirely, in which case the best solution is having the system configured to quickly fail over to local authentication or to use the local cache.

Even if failover to a secondary system is working, a lot of the timeout defaults are too high (e.g. 300 seconds before trying the secondary). Whilst the lookups will still complete eventually, these delays will noticeably impact services, so it’s recommended to look up the authentication methods being used and adjust the timeouts down to a couple of seconds at most.
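A cheap way to see whether those timeouts are biting is to time a user lookup, since it goes through the same name service configuration that ls and friends rely on. This is a minimal sketch; the function names and the two-second limit are assumptions for illustration.

```python
import pwd
import time


def timed_user_lookup(uid=0):
    """Resolve a UID through the system's user database and time it.

    Returns (user name or None, seconds taken).
    """
    started = time.monotonic()
    try:
        name = pwd.getpwuid(uid).pw_name
    except KeyError:
        name = None  # no configured source knows this UID
    return name, time.monotonic() - started


def is_slow(elapsed, limit=2.0):
    """Flag lookups that took longer than `limit` seconds."""
    return elapsed > limit


if __name__ == "__main__":
    name, elapsed = timed_user_lookup(0)
    status = "SLOW" if is_slow(elapsed) else "ok"
    print(f"uid 0 -> {name} in {elapsed:.3f}s ({status})")
```

For a meaningful result, pass the UID of a user that lives in the central directory rather than in the local passwd file, and try it with the primary server deliberately taken offline.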

These are just a few simple yet nasty ways to break Linux systems that cause weird application behaviour, but not necessarily in a form that’s easy to debug.

In most cases, decent monitoring will help you avoid and handle many of these issues better by alerting to low resource situations – if you have nothing currently, Nagios is a good start.