I’ll be in Wellington for Kiwicon from Fri 8th to Tue 12th. If you’re coming to the conference, track me down and we’ll catch up there. If you’re not at the conference, I’ll be around town, so just drop me a message and I’ll be keen to say hi. :-)

Tag Archives: security

Varnish DoS vulnerability

The Varnish developers have recently announced a DoS vulnerability in Varnish (CVE-2013-4484). If you’re using Varnish in your environment, make sure you adjust your configuration to fix the vulnerability if you haven’t already.

In a test of our environment, we found many systems were protected by a default catch-all vcl_error already, but there were certainly systems that suffered. It’s a very easy issue to check for and reproduce:

    # telnet failserver1 80
    Trying 127.0.0.1...
    Connected to failserver1.example.com.
    Escape character is '^]'.
    GET
    Host: foo
    Connection closed by foreign host.

You will see the Varnish child dying in the system logs at the time:

    Oct 31 14:11:51 failserver1 varnishd[1711]: Child (1712) died signal=6
    Oct 31 14:11:51 failserver1 varnishd[1711]: child (2433) Started
    Oct 31 14:11:51 failserver1 varnishd[1711]: Child (2433) said Child starts
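If you have a pile of servers to check, a few lines of Python can pick these entries out of the logs for you. This is only a rough sketch that assumes the log lines look exactly like the ones above; the function name `find_child_deaths` is made up for this example.

```python
import re

# Matches lines such as:
#   Oct 31 14:11:51 failserver1 varnishd[1711]: Child (1712) died signal=6
CHILD_DIED = re.compile(r"varnishd\[(\d+)\]: Child \((\d+)\) died signal=(\d+)")


def find_child_deaths(log_lines):
    """Return (parent_pid, child_pid, signal) for each Varnish child death found."""
    deaths = []
    for line in log_lines:
        match = CHILD_DIED.search(line)
        if match:
            deaths.append(tuple(int(group) for group in match.groups()))
    return deaths


if __name__ == "__main__":
    sample = [
        "Oct 31 14:11:51 failserver1 varnishd[1711]: Child (1712) died signal=6",
        "Oct 31 14:11:51 failserver1 varnishd[1711]: child (2433) Started",
    ]
    for parent, child, signal in find_child_deaths(sample):
        print(f"varnishd {parent}: child {child} died with signal {signal}")
```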

Make sure you go and apply the fix now. Upstream advises applying a particular configuration change and hasn’t released a code fix yet, so distributions are unlikely to be releasing an updated package to fix this for you any time soon.

Delicious Entropy

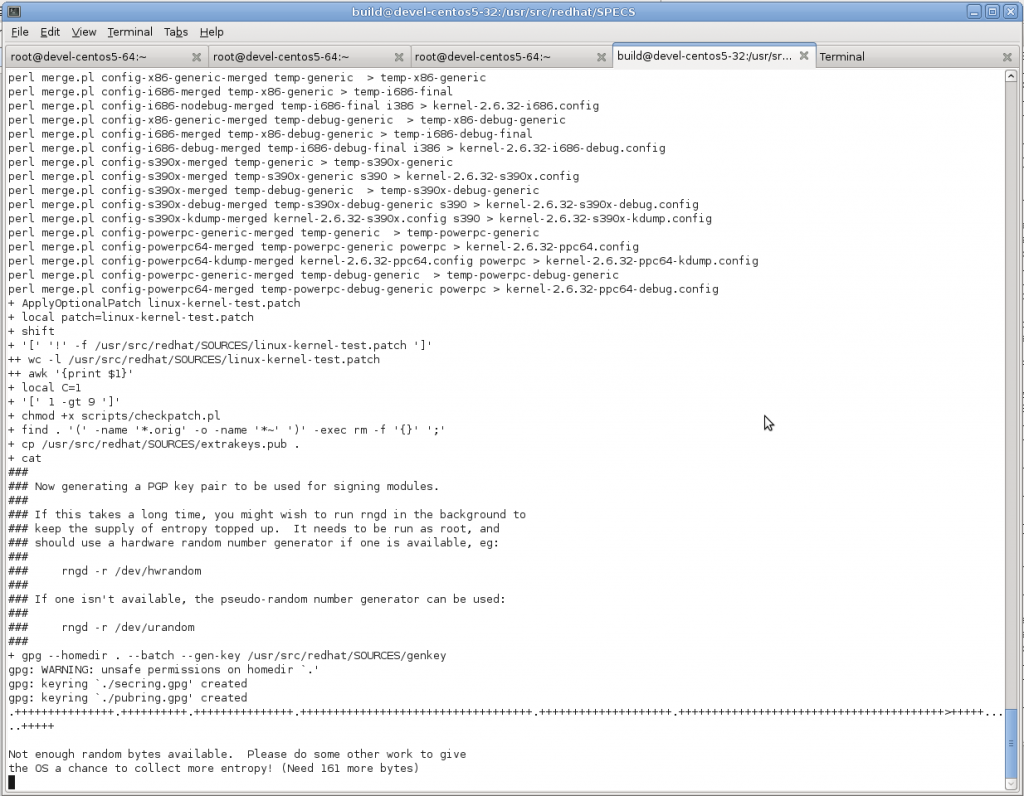

I run a large GNU/Linux server with KVM for running numerous virtual machine guests, including build hosts used to package and compile software for different GNU/Linux distributions and other operating systems.

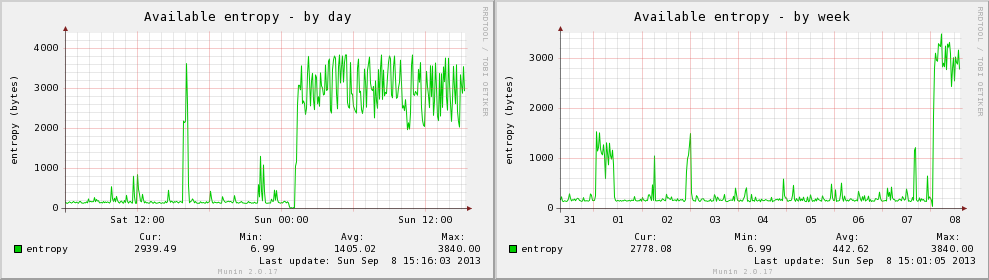

I recently ran into an issue where a kernel compile hung indefinitely whilst GPG tried to sign kernel modules as part of the build process, due to the virtual machine guest running out of available entropy and being unable to proceed until more random data was available.

On Linux there are two sources of random data – /dev/random, which provides high quality random data and /dev/urandom which provides an unlimited amount of pseudo-random data based on a seed value taken from the random pool initially.

Linux generates this random data by collecting entropy from somewhat-random events, such as disk activity, network activity, keyboard, mouse and other sources. When the pool of entropy is exhausted, /dev/random will block (i.e. force processes to freeze) until more is available, whereas /dev/urandom will continue to serve pseudo-random data, although the quality of that data is not considered as secure as /dev/random.
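You can watch the pool level directly, since the kernel exposes its current entropy estimate (in bits) under /proc. Here is a small sketch that reads it; the helper name `read_entropy_bits` is my own, and it simply returns None on systems where the file isn’t available.

```python
from pathlib import Path

# Standard Linux procfs location for the kernel's entropy estimate (in bits).
ENTROPY_AVAIL = Path("/proc/sys/kernel/random/entropy_avail")


def read_entropy_bits(path=ENTROPY_AVAIL):
    """Return the kernel's entropy estimate in bits, or None if it can't be read."""
    try:
        return int(Path(path).read_text().strip())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    bits = read_entropy_bits()
    if bits is None:
        print("entropy estimate unavailable on this system")
    else:
        print(f"{bits} bits of entropy available")
```

Running it in a loop whilst something reads from /dev/random is an easy way to see the pool drain and refill.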

On a workstation or single server this tends to be enough to generate sufficient random data for most applications (although if you’re doing certain tasks you may still have an issue). Virtual machines, on the other hand, lack hardware sources of entropy such as disks or keyboards, and it’s very easy to quickly exhaust the available entropy pool and have some applications block until more is available.

Applications like Apache (with mod_ssl) and OpenSSL use /dev/urandom so aren’t impacted by shortages of entropy, but some signing processes, such as GPG, require /dev/random and can be impacted if the source of entropy is exhausted – which is exactly what happened to my kernel signing process.

It’s pretty easy to test how quickly a Linux system refills the entropy pool by reading data from /dev/random, forcing the pool to empty and be repopulated.

    # dd if=/dev/random of=/dev/null count=1000
    0+1000 records in
    16+1 records out
    8496 bytes (8.5 kB) copied, 149.849 s, 0.1 kB/s

The host doing this test has around 12 physical hard disks, 10 active KVM virtual machines spewing out packets and an unfiltered WAN link feeding random junk – all of which is good for generating a decent amount of entropy. The numbers may look pretty bad, but compare them with the amount of entropy generated by my laptop…

    # dd if=/dev/random of=/dev/null count=1000
    0+1000 records in
    16+1 records out
    8409 bytes (8.4 kB) copied, 1389.95 s, 0.0 kB/s

The rate of entropy generation on my laptop is quite depressing – but at least my laptop has a keyboard, mouse and hardware environmental values to help add something to the entropy sources.

When I run the same test on a virtual machine guest, which lacks all these physical sources, it comes to a grinding halt:

    # dd if=/dev/random of=/dev/null count=10000
    0+24 records in
    0+0 records out
    0 bytes (0 B) copied, 1865.68 s, 0.0 kB/s

I was forced to kill the above test as it stalled indefinitely: the guest had run out of available entropy and was unable to generate any more to complete the test. :-(

Even when performing an intensive activity such as compiling a large software library, it still takes considerable time to complete this test on a VM:

    # dd if=/dev/random of=/dev/null count=1000
    0+1000 records in
    15+1 records out
    8018 bytes (8.0 kB) copied, 2560.36 s, 0.0 kB/s

It seems that the lack of the random data generated by active physical hardware is too much for the VM guest to be able to complete the test. And whilst some applications like an HTTPS website would continue to operate fine, others like a build host GPG-signing packages may fail and hang indefinitely, unable to obtain the required volume of random data to complete its key generation process.

For times when this lack of entropy becomes an issue for your applications, it is possible to obtain additional entropy from a hardware random number generator – this can be as simple as using a feed such as analog noise from the sound card or as sophisticated as a hardware random number generator or functionality built into certain CPUs which is designed to be extremely random and unpredictable.

A while ago I picked up a pair of Simtec Electronics’ Entropy Keys, small USB devices which generate truly random data by a clever method of abusing semiconductors, and connected one to my primary KVM server.

The device ships with an open source daemon that takes random data from the key and injects it into the Linux entropy pool for use by all applications that read /dev/random. It instantly makes a huge difference to the available volume by generating almost 3.9KB/s of random data.

After starting the daemon and re-running the test, the performance looks much better:

    # dd if=/dev/random of=/dev/null count=1000
    0+1000 records in
    145+1 records out
    74504 bytes (75 kB) copied, 21.8926 s, 3.4 kB/s

The numbers are still low, but the reality is that you generally only need a few bytes at a time, rather than massive volumes like this test demands – for general signing usage, 3.4kB/s is a huge volume to have.
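Since dd rounds its rate to one decimal place of kB/s, the slower runs all show up as 0.0 or 0.1 kB/s. To compare them properly, the rate can be recomputed from the byte count and elapsed time in each summary line. A quick sketch, assuming the summary format shown in the dd output above (the function name `bytes_per_second` is just for illustration):

```python
import re

# Pulls the byte count and elapsed seconds out of a dd summary line such as:
#   8496 bytes (8.5 kB) copied, 149.849 s, 0.1 kB/s
SUMMARY = re.compile(r"(\d+) bytes .*copied, ([\d.]+) s")


def bytes_per_second(dd_summary):
    """Return the throughput in bytes per second for one dd summary line."""
    match = SUMMARY.search(dd_summary)
    if match is None:
        raise ValueError(f"not a dd summary line: {dd_summary!r}")
    return int(match.group(1)) / float(match.group(2))


if __name__ == "__main__":
    runs = {
        "KVM host": "8496 bytes (8.5 kB) copied, 149.849 s, 0.1 kB/s",
        "laptop": "8409 bytes (8.4 kB) copied, 1389.95 s, 0.0 kB/s",
        "KVM host with Entropy Key": "74504 bytes (75 kB) copied, 21.8926 s, 3.4 kB/s",
    }
    for name, summary in runs.items():
        print(f"{name}: {bytes_per_second(summary):.1f} bytes/s")
```

That works out to roughly 57 bytes/s for the KVM host, 6 bytes/s for the laptop and 3.4 kB/s with the Entropy Key, so the key gives about 60 times the rate the host managed on its own.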

So whilst this test doesn’t reflect the real way /dev/random is used, it does illustrate the difference in data volume a proper random number generator can make. And whilst this might not be a common problem thanks to the low volume of random data required for most applications to function, the increasing use of virtualisation makes this an issue that people may bump into more in future.

Now that I have my host server getting a reliable and steady flow of random data, my next step is to share that data to the virtual machines running on the host – as I’m doing all my signing in guests, it’s vital that I get that random data through to them.

I’m in the process of investigating a few different options and will cover these in a follow up blog post, as it’s a somewhat sizeable topic in its own right.

WordPress & SSL Fixes

I’ve been using WordPress for this blog for a number of years now – at some point I realised that whilst writing my own code is fun, there’s no need to reinvent yet-another-fucking-blog-platform and ended up selecting WordPress to use for my content, on the basis of its strong and active development and community.

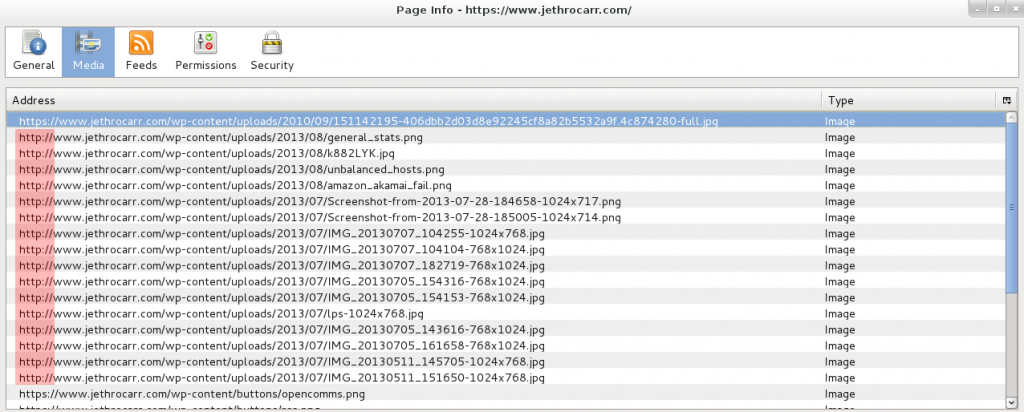

Generally it’s pretty good, but there are times it disappoints, such as WordPress expecting servers to have FTP for unpacking updates and plugins (it’s 2013 guys, SFTP at least!), excessively setting cookies which makes caching layers more complex and doing stupid stuff with storing full URLs inside the database for page links and image resources.

The latter has been impacting me in particular. Visitors to my site have had the option of using HTTP or HTTPS (SSL secured) access methods for some time, but annoyingly whenever I post an article with images, WordPress includes all the images using http://. This mixed content prevents browsers from showing the lock icon (best case) or throws up a nasty error (worst case), depending on the browser and its level of concern for user safety for mismatched content.

I could work around this by setting the WordPress base URL for my site to be https://www.jethrocarr.com, but then images served at the unsecured http:// site would also be served via SSL, which is just adding pointless load to the server (not that SSL termination really adds much load these days, but damnit, I’m being a purist here!).

I was hoping that it was a misconfiguration of my WordPress setup, but reading online it seems that this is a known issue with WordPress and a whole bunch of modules, hacks and themes have sprung up to fix/workaround the issue…

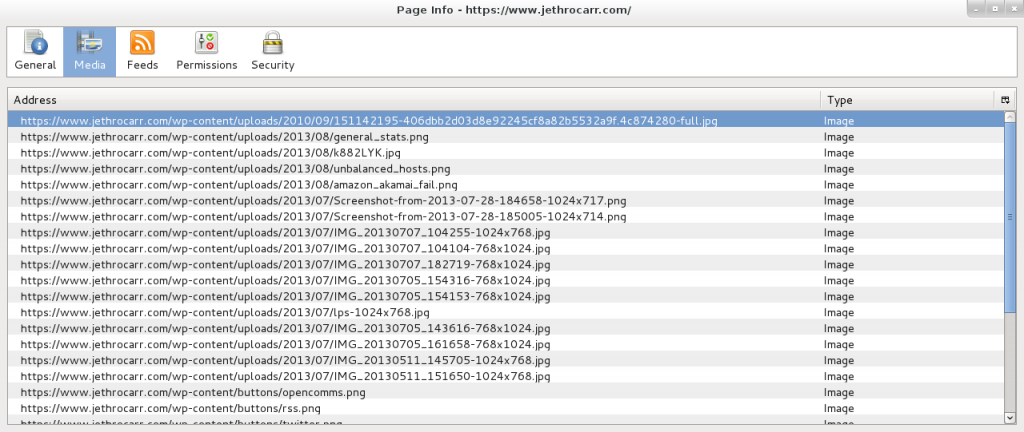

Of course there’s an easier way – fix it at the webserver layer! Both Nginx and Apache have modules to do substitutions in page content on load, for Nginx there’s HttpSubModule and for Apache there is mod_substitute. In my case with stock Apache 2.2 on CentOS 5, I was able to fix the whole issue by adding the following to my SSL vhost configuration:

    # Fix SSL URLs thanks to WordPress hardcoding http:// links to images :'(
    <Location />
        AddOutputFilterByType SUBSTITUTE text/html
        Substitute "s|http://www.example.com|https://www.example.com|"
    </Location>

Following this, things look much better:

Technically this substitution will have some level of performance impact, as it has to process the generated HTML content and check for strings to replace, but the impact is so low that I wasn’t able to measure it amongst the usual variation of page response times – and it’s not going to be anywhere as slow as mod_php and WordPress itself anyway. ;-)
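For anyone who wants to see what the filter is effectively doing to each page, here is a rough Python equivalent. It is only an illustration of the rewrite, not how mod_substitute is implemented, and it does a plain literal string replacement; the function name `rewrite_links` is made up for the example.

```python
def rewrite_links(html,
                  insecure="http://www.example.com",
                  secure="https://www.example.com"):
    """Rewrite hardcoded http:// links for our own site to https://."""
    return html.replace(insecure, secure)


if __name__ == "__main__":
    page = (
        '<img src="http://www.example.com/wp-content/uploads/photo.png">'
        '<a href="http://other.example.org/">elsewhere</a>'
    )
    # Only links to our own hostname change; third-party links are untouched.
    print(rewrite_links(page))
```

Because only the site’s own hostname is matched, links to other sites keep whatever scheme they were written with.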

Finally, if you haven’t already, you probably want to change the following in wp-config.php:

define('FORCE_SSL_ADMIN', true);

This forces all WordPress logins and wp-admin activities to take place under HTTPS which is a pretty good idea if you ever post to your blog from an unsecured network.

SSL Intermediate CA Bundles with Amazon

When configuring SSL services, generally you need to set a certificate, a private key and the CA bundle containing the intermediate certificate(s), which is often a bundle of several different certificates.

For example, https://www.jethrocarr.com’s configuration looks like:

    SSLEngine on
    SSLCertificateFile jethrocarr.com.crt
    SSLCertificateKeyFile jethrocarr.com.key
    SSLCertificateChainFile startssl.intermediate.ca.crt

When your browser connects, it doesn’t trust jethrocarr.com.crt, but it checks it against the certificates that have signed it in startssl.intermediate.ca.crt – and those certificates are signed by the CA that your browser trusts.

This means that the CAs can protect their root certificates, which are trusted by the browser, much more securely and sign their certificates with intermediates that can be revoked and easily(ish) replaced should the need arise.

Generally this works fine from the end user perspective, although there are sometimes issues when a sysadmin forgets to add the intermediate CA bundle and doesn’t immediately notice, as some browsers work fine whilst others fail depending on whether or not they already trust the intermediates.

Today I ran into a new and different issue, where Amazon Web Services is fussy about the order of the certificates in that bundle when adding a certificate to an Elastic Load Balancer for SSL termination.

Any attempt to upload my certificate was met with “Invalid Public Key Certificate”, which didn’t make a lot of sense as I was certain that my certificates were OK. It was easy to verify and prove this, using OpenSSL:

    $ openssl rsa -noout -modulus -in example.com.key | openssl md5
    (stdin)= 30e1b6cb4168117b7923392ca536c701
    $ openssl x509 -noout -modulus -in example.com.crt | openssl md5
    (stdin)= 30e1b6cb4168117b7923392ca536c701
    $ openssl verify -verbose -CAfile cabundle.crt example.com.crt
    example.com.crt: OK

This proved that my certificates were all correct so the fault was Amazon-side. A post on their forums helped me “fix” the issue, by adjusting the order of my CA bundle, which subsequently fixed the error.

So is this a bug with Amazon? It’s tricky to say – there are several posts online which state that the order is important for some systems, but not for all. Clearly anything based around OpenSSL doesn’t care, as it was able to verify my out-of-order CA bundle happily enough.

As one does with issues like this, I dug into RFC 3280 which details how the certificate path validation should occur. Section 6.1 (Basic Path Validation) details that the path validation process is actually outside the specification, but then goes on and defines how the validation could occur, with the order of the certificates being implied, but not stated outright.

    The primary goal of path validation is to verify the binding between
    a subject distinguished name or a subject alternative name and
    subject public key, as represented in the end entity certificate,
    based on the public key of the trust anchor. This requires obtaining
    a sequence of certificates that support that binding. The procedure
    performed to obtain this sequence of certificates is outside the
    scope of this specification.

    To meet this goal, the path validation process verifies, among other
    things, that a prospective certification path (a sequence of n
    certificates) satisfies the following conditions:

       (a) for all x in {1, ..., n-1}, the subject of certificate x is
       the issuer of certificate x+1;

       (b) certificate 1 is issued by the trust anchor;

       (c) certificate n is the certificate to be validated; and

       (d) for all x in {1, ..., n}, the certificate was valid at the
       time in question.

Following the above, the specification goes on to detail different ways the path can be validated, which also imply that the certificates should be read in and then sorted by software, but it doesn’t actually state this outright.

Sadly, with the way that this specification is written, it’s not clear, which means the only 100% certain way to ensure nothing is unhappy is to put the CA bundle file in the correct order, which is something I would expect the SSL provider to do when they provide you with the files.
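Condition (a) from the RFC is enough to sort a bundle mechanically: start from your own certificate and keep following issuer to subject. Below is a minimal sketch of that idea using plain (subject, issuer) name pairs rather than real certificates. The certificate names are made up, and which end of the chain a given system wants first is an assumption on my part; this version starts with the issuer of the server certificate and works up towards the root.

```python
def order_bundle(leaf, bundle):
    """Order CA certificates so each one is followed by the certificate that issued it.

    leaf and every bundle entry are (subject, issuer) tuples. The result starts
    with the certificate that issued the leaf and works up towards the root.
    Entries that are not part of the leaf's chain are left out.
    """
    by_subject = {subject: (subject, issuer) for subject, issuer in bundle}
    ordered = []
    current = leaf
    # pop() removes each certificate as it is used, so a self-signed root
    # (subject == issuer) ends the loop instead of repeating forever.
    while current[1] in by_subject:
        current = by_subject.pop(current[1])
        ordered.append(current)
    return ordered


if __name__ == "__main__":
    leaf = ("example.com", "Example Intermediate CA")
    out_of_order = [
        ("Example Root CA", "Example Root CA"),
        ("Example Intermediate CA", "Example Root CA"),
    ]
    for subject, issuer in order_bundle(leaf, out_of_order):
        print(f"{subject} (issued by {issuer})")
```

Reversing the result and appending the leaf gives the numbering the RFC uses, where certificate 1 is issued by the trust anchor and certificate n is the one being validated.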

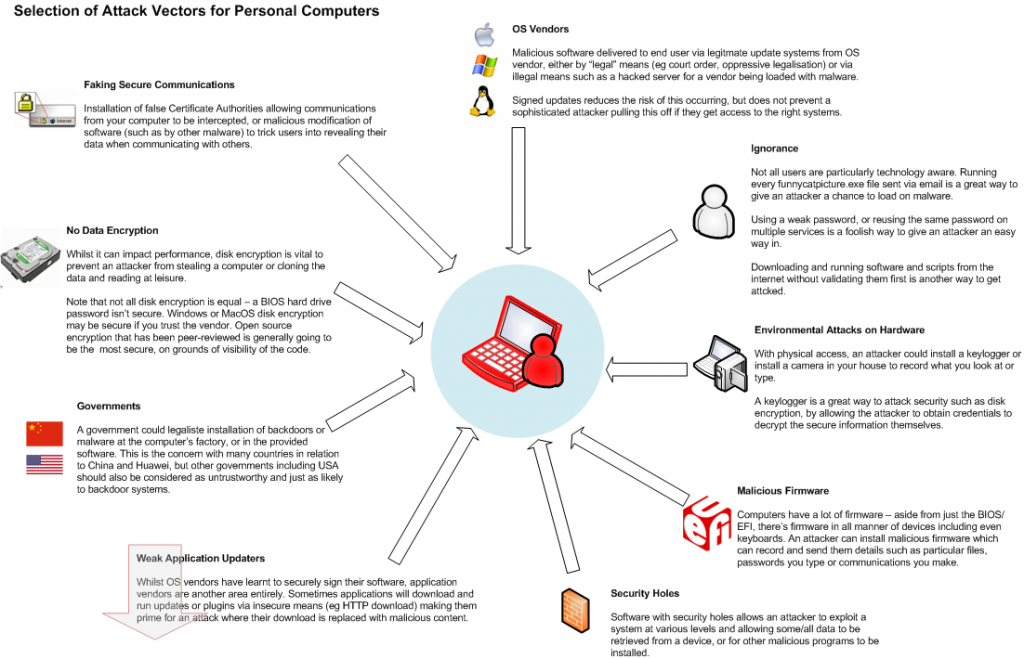

Attack vectors for personal computers

I’ve put together a (very) simplified overview of various attack vectors for an end user’s personal computer. For a determined attacker with the right resources, all of the above is potentially possible, although whether an attacker would go to this much effort would depend on the value of the data you have vs the cost of obtaining it.

By far the biggest risk is you, the end user – strong, unique passwords and following practices such as disk encryption and not installing software from questionable vendors are the biggest protection from a malicious attacker and will protect against most of the common attacks, including physical theft and remote attacks via the internet.

Where it gets nasty is when you’re up against a more determined attacker who can get hardware access to install keyloggers, can force a software vendor to push a backdoored software patch to your system via an update channel (ever wondered what the US government could make Microsoft distribute via Windows Update for them?), or has the knowledge of how to pull off an advanced attack such as putting your entire OS inside a hypervisor by attacking UEFI itself.

Of course never forget the biggest weakness – beating a user with a wrench until they give up their password is a lot cheaper than developing a sophisticated exploit if someone just wants access to some existing data.

Why SSL is really ISL

Secure transmission of data online is extremely important to avoid attackers intercepting data or claiming to be a site that they are not. To provide this, a technology called SSL/TLS (and commonly seen in the form of https://) was developed to provide security between an end user and a remote system to guarantee no interception or manipulation of data can occur during communications.

SSL is widely used for a huge number of websites, ranging from banks and companies to this blog, as well as for securing other protocols such as IMAP/SMTP (for email). SSH (for remote server shell logins) offers similar protection, but uses its own protocol rather than SSL.

As a technology, we generally believe that the cryptography behind SSL is not currently breakable (excluding attacks against some weaker parts of older versions) and that there’s no way of intercepting traffic by breaking the cryptography (assuming that no organisation has broken it and is staying silent on that fact for now).

However, this doesn’t protect users against an attacker going around SSL and attacking weak points outside the cryptography, such as the validation of certificate identity, or avoiding SSL altogether and faking connections for users, which is a lot easier than having to break strong cryptography.

Beating security measures by avoiding them entirely

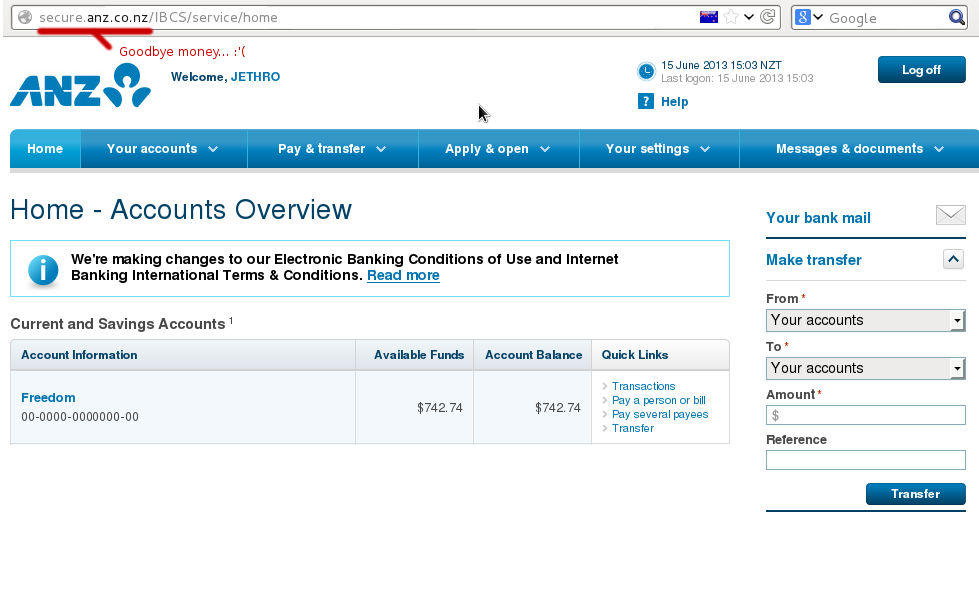

It’s generally much easier to beat SSL by simply avoiding it entirely. A technology aware user will always check for the https:// URL and for the lock symbol in their browser, however even us geeks sometimes forget to check when in a hurry.

By intercepting a user’s connection, an attacker can communicate with the secure https:// website, but then replay it to the user in an unencrypted form. This works perfectly and unless the user explicitly checks for the https:// and lock symbol, they’ll never notice the difference.

Anyone on the path of your connection could use this attack, from the person running your company network, a flatmate on your home LAN with a hacked router or any telecommunications company that your traffic passes through.

However, it’s not a particularly sophisticated attack, and a smart user can detect when it’s taking place and be alerted to a malicious network operator trying to attack them.

“Trusted” CAs

Whilst SSL is vendor independent, by itself SSL only provides security of transmission between yourself and a remote system, but it doesn’t provide any validation or guarantee of identity.

In order to verify whom we are actually talking to, we rely on certificate authorities – these are companies which, for a fee, validate the identity of a user and sign an SSL certificate with their CA. Once signed, as long as your browser trusts the CA, your certificate will be accepted without warning.

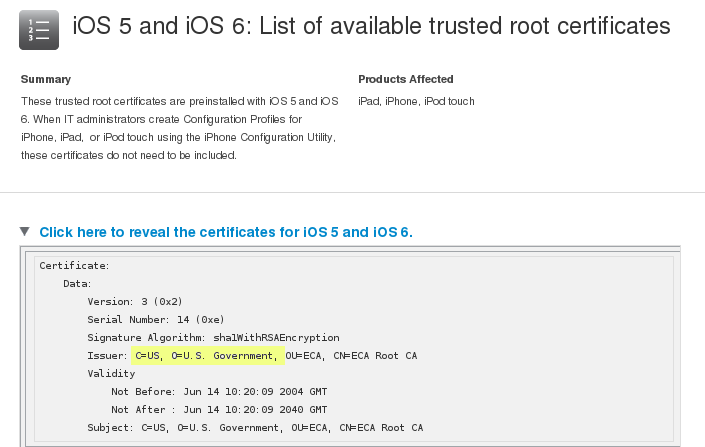

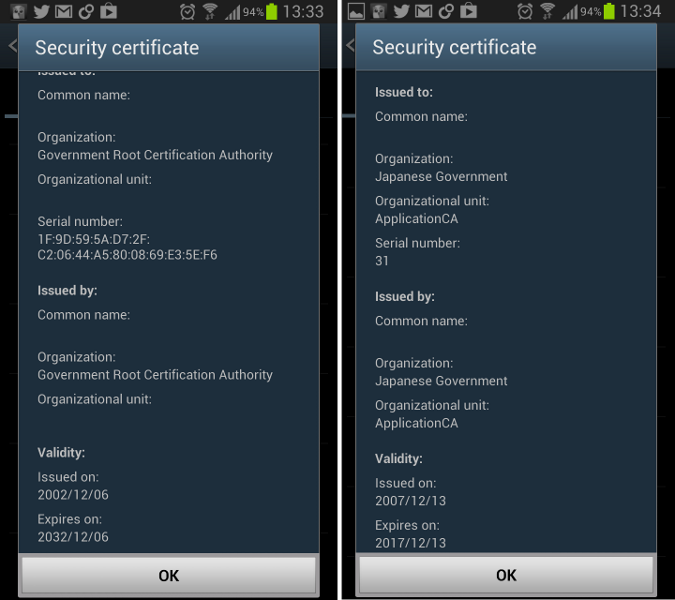

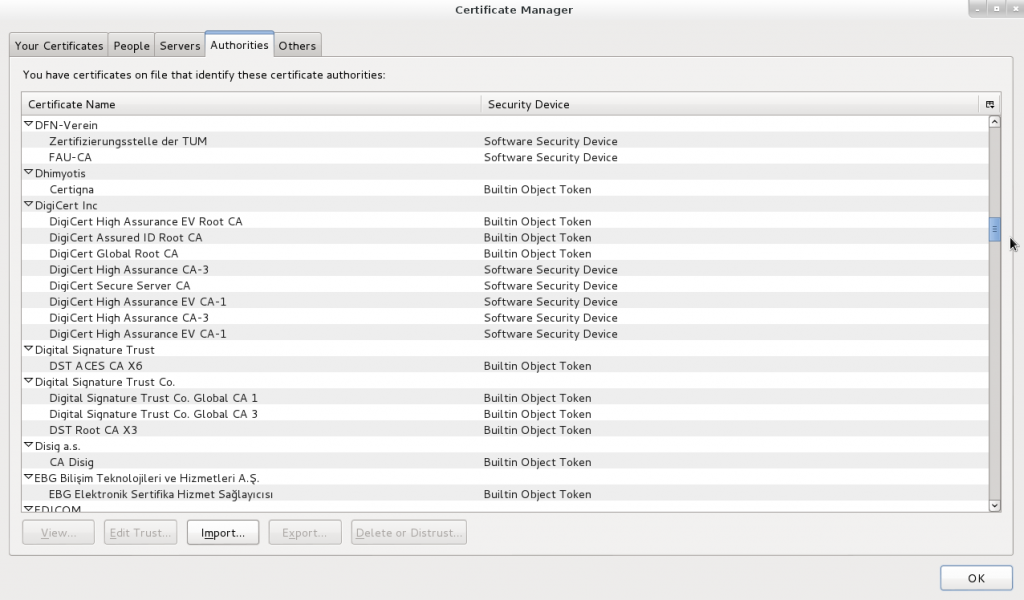

Your computer and web browsers have an inbuilt list of hundreds of CAs around the world which you trust to be reliable validators of security – as long as none of these CAs sign a certificate that they shouldn’t, your system remains secure. If any one of these CAs gets broken into or ordered by a government to sign a certificate, then an attacker could fake any certificate they want to and intercept your traffic without you ever knowing.

I sure hope all these companies have the same strong validation processes and morals that I’d have when validating certificates…

You don’t even need to be a government to exploit this – if you can get access to the end user device that’s being used, you can attack it.

A system administrator in a company could easily install a custom CA into all the desktop computers in the company. By doing this, that admin could then generate certificates faking any website they want, with the end user’s computer accepting the certificate without complaint.

The user can check for the lock icon in their browser and feel assured of security, all whilst having their data intercepted in the background by a malicious admin. This is possible due to the admin-level access to the computer, but what about external companies that don’t have access to your computer, such as Internet Service Providers (ISPs) or an organisation like government agencies*? (* assuming they don’t have a backdoor into your computer already).

An ISP’s attack options are limited since they can’t fake a signed certificate without help from a CA. There’s also no incentive to do so; stealing customer data will ensure you don’t remain in business for a particularly long time. However, an ISP could be forced to do so by a government legal order and is a prime place for the installation of interception equipment.

A government agency could use their own CA to sign fake certificates for a target they wish to intercept, or force a CA in their jurisdiction to sign a certificate for them with their valid trusted CA if they didn’t want to give anything away by signing certificates under their organisation name. (“https://wikileaks.org signed by US Government” doesn’t look particularly legit to a cautious visitor, but would “https://wikileaks.org signed by Verisign” raise your suspicions?)

If you’re doubting this is possible, keep in mind that your iOS or Android devices already trust several government CAs, and even browsers like Firefox include multiple government CAs.

It’s getting even easier for a government to intercept if desired – more and more countries are writing lawful interception requirements into ISP legislation, requiring ISPs to intercept a customer’s traffic and provide it to a government authority upon request.

This could easily include an ISP being legally required to route traffic for a particular user(s) through a sealed device provided by the government, which could be performing any manner of attacks, including serving up intercepted SSL certs to users.

So I’m fucked, what do I do now?

Having established that by default, your SSL “secured” browser is probably prime for exploit, what is the fix?

Fortunately the trick of redirecting users to unsecured content is getting harder with browsers using methods such as Extended Validation certs to make the security more obvious – but unless you actually check that the site you’re about to login to is secure, all these improvements are meaningless.

Some extensions such as HTTPS Everywhere have limited whitelists of sites that they know should always be SSL secured, but these are not complete lists and can’t be relied on 100%. Generally the only true fix for this issue is user education.
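The whitelist idea itself is simple enough to sketch in a few lines. This is not how HTTPS Everywhere is actually implemented, just an illustration of the concept; the hostnames and the `upgrade_url` function are hypothetical, and real rulesets need to handle things like non-standard ports that this ignores.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical list of hosts we know should always be reached over HTTPS.
ALWAYS_HTTPS = {"www.example.com", "bank.example.org"}


def upgrade_url(url, always_https=ALWAYS_HTTPS):
    """Rewrite http:// URLs to https:// for hosts on the whitelist."""
    parts = urlsplit(url)
    if parts.scheme == "http" and parts.hostname in always_https:
        parts = parts._replace(scheme="https")
    return urlunsplit(parts)


if __name__ == "__main__":
    print(upgrade_url("http://www.example.com/login?next=/account"))
    print(upgrade_url("http://unlisted.example.net/"))
```

The second URL comes back unchanged, which is exactly the weakness: any site missing from the list gets no protection at all.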

The CA issue is much more complex – one can’t browse the web without certificate authorities being trusted by your browser so you can’t just disable them. There are a couple approaches you could consider and it really depends whether or not you are concerned about company interception or government interception.

If you’re worried about interception by your employer, the only true safeguard is not using your work computer for anything other than work. I don’t do anything on my work computer other than my job; I have a personal laptop for any of my own stuff. In addition to preventing a malicious sysadmin from installing a CA, using a personal machine also gives me legal protections against an employer reading my personal data on the grounds of it being on a company owned device.

If you’re worried about government interception, the level you go to protect against it depends whether you’re worried about interception in general, or whether you must ensure security to some particular site/system at all cost.

A best-efforts approach would be to use a browser plugin such as Certificate Patrol, which alerts when a certificate on a site you have visited has changed – sometimes it can be legitimate, such as the old one expiring and a new one being registered, or it could be malicious interception taking place. It would give some warning of widespread interception, but it’s not an infallible approach.
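The underlying idea is to remember a fingerprint of each site’s certificate and compare it on the next visit. Here is a minimal sketch of that comparison. It is not Certificate Patrol’s actual code; the function names and the in-memory `seen` dictionary are assumptions for the example. In Python the raw certificate bytes could come from `SSLSocket.getpeercert(binary_form=True)`.

```python
import hashlib


def fingerprint(der_bytes):
    """Return the SHA-256 fingerprint of a DER-encoded certificate as hex."""
    return hashlib.sha256(der_bytes).hexdigest()


def check_certificate(host, der_bytes, seen):
    """Compare a host's certificate against the one remembered in `seen`.

    Returns "new" the first time a host is seen (and remembers it),
    "unchanged" if the fingerprint matches, or "changed" if it differs.
    A changed certificate is NOT remembered automatically, so the user
    gets to decide whether the change is legitimate.
    """
    current = fingerprint(der_bytes)
    previous = seen.get(host)
    if previous is None:
        seen[host] = current
        return "new"
    return "unchanged" if previous == current else "changed"


if __name__ == "__main__":
    seen = {}
    # Placeholder bytes standing in for real DER-encoded certificates.
    print(check_certificate("www.example.com", b"original cert", seen))
    print(check_certificate("www.example.com", b"original cert", seen))
    print(check_certificate("www.example.com", b"different cert", seen))
```

Note that the very first visit is trusted blindly, which is one reason this approach can’t be considered infallible.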

A more robust approach would be to have two different profiles of your browser installed. The first is for general web browsing and includes all the standard CAs. The second is a locked down one for sites where you must have 100% secure transmission.

In the second browser profile, disable ALL the certificate authorities and then install the CAs or the certificates themselves for the servers you trust. Any other certificates, including those signed by normally trusted CAs would be marked as untrusted.

In my case I have my own CA cert for all my servers, so I can import this CA into an alternative browser profile and can safely verify that I’m truly talking to my servers directly, as no other CA or cert will ever be accepted.
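The same trick works for scripts as well as browsers. As a sketch, Python’s ssl module can build a context that starts with no trusted CAs at all and then only trusts the one you load into it. The file name `my-ca.crt` and the function name `locked_down_context` below are hypothetical.

```python
import ssl


def locked_down_context(ca_file=None):
    """Build a TLS client context that trusts only the CA in ca_file.

    With ca_file=None the context trusts nothing, so every certificate
    is rejected.
    """
    # PROTOCOL_TLS_CLIENT turns on certificate and hostname verification,
    # and a bare SSLContext does not load the system's default CA list
    # (unlike ssl.create_default_context()).
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    if ca_file is not None:
        context.load_verify_locations(cafile=ca_file)
    return context


if __name__ == "__main__":
    context = locked_down_context()
    print("trusted CAs loaded:", context.cert_store_stats()["x509_ca"])
    # With a real CA file: context = locked_down_context("my-ca.crt")
```

The resulting context can be handed to something like `urllib.request.urlopen(url, context=context)`, and a certificate signed by any of the normally trusted CAs will then fail verification.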

I’m a smart user and did all this, am I safe now?

Great stuff, you’re now somewhat safer, but a determined attacker still has several other approaches they could take to exploit you, by going around SSL:

- Malicious software – a hacked version of your browser could fake anything it wants, including accepting an attacker’s certificate but reporting it as the usual trusted one.

- A backdoored operating system allows an attacker to install any software desired. For example, the US Government could legally force Apple, Google or Microsoft to distribute some backdoor malware via their automatic upgrade systems.

- Keyboard & screen logging software stealing the output and sending it to the attacker.

- Some applications/frameworks are just poorly coded and accept any SSL certificate, regardless of whether or not it’s valid; these applications are prime to exploit.

- The remote site could be attacked, so even though your communications to it are secured, someone else could be intercepting the data on the server end.

- Many, many more.

The best security is awareness of the risks

There’s never going to be a way to secure your system 100% – but by understanding some of the attack vectors, you can decide what is a risk to you and make the decision about what you do and don’t need to secure against.

You might not care about any of your regular browsing being intercepted by a government agency; in that case, keep browsing happily.

You might be worried about this since you’re in the region of an oppressive regime, or are whistle-blowing government secrets, in which case you may wish to take steps to ensure your SSL connections aren’t being tampered with.

And maybe you decided that you’re totally screwed and resort to communicating by meeting your friends in person in a Faraday cage in the middle of nowhere. It all comes down to the balance of risk and usability you wish to have.

PRISM Break

The EFF has put together a handy website for anyone looking to replace some of their current proprietary/cloud controlled systems with their own components.

You can check out their guide at: http://prism-break.org/

Generally it’s pretty good, although I have concerns around a couple of their recommendations:

- DuckDuckGo is a hosted proprietary service, so whilst they claim to not track or record searches, it’s entirely possible that they could be legally forced to do so for a particular user/IP address and have a gag order on that. Having said this, it sounds like they’re the type of company that would push back against such requests as much as possible.

- Moving from Gmail to something like Riseup is just replacing one centralised provider with another; it doesn’t add any additional protection against PRISM.

As always, the only truly secure system (excluding security bugs etc) is one you control entirely. If a leak of your data must be avoided at all costs, you need to be running your own server.

IRD online services registration

I recently signed up with IRD’s (New Zealand’s Tax Department) online Kiwisaver service, so I could view the status of my payments and balance of New Zealand’s voluntary superannuation scheme.

The user sign up form is pretty depressing (and no, not just because it’s about signing up to tax rather than cool stuff):

My first concern is passwords being limited to a maximum of 10 characters. That’s way too short for many good passwords (or even better, passphrases); any system should take at least 255 chars without complaint.
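There’s no storage reason for a short limit either, at least if passwords are being hashed properly: a hash comes out the same size no matter how long the input is. I have no idea how IRD actually stores passwords, so the snippet below is purely an illustration using PBKDF2 from Python’s standard library, with an iteration count picked arbitrarily for the example.

```python
import hashlib
import os


def hash_password(password, salt, iterations=100_000):
    """Derive a fixed-length (32 byte) hash from a password of any length."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)


if __name__ == "__main__":
    salt = os.urandom(16)
    short = hash_password("10charpass", salt)
    long = hash_password("a much longer passphrase that nobody will guess " * 5, salt)
    # Both hashes are the same size, so the database column never needs to grow.
    print(len(short), len(long))
```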

Secondly, the “forgotten password phrase” is the most stupid thing I’ve ever seen, it’s basically a second password field – if you forget your password, you can contact them and give them this second password…. except that if you’re stupid enough to forget the first password, how the hell are you going to remember a secondary normally never-used password?

I’d also love to know how secure the secondary password phrase requirements are, because since it gives you access into the account, the security is no stronger than whatever you put in here – and how likely are users to choose something good and secure as their “backup phrase”?

These are some pretty simple security concepts and I’m a bit dismayed that IRD managed to get them so wrong – at least it shouldn’t be hard to correct….

Pimping my ride with high pitch painful sounds

I got my car back from the repair shop on Friday following its run-in with the less pleasant residents of Auckland, with all the ignition and dash repaired.

Unfortunately the whole incident cost me at least $500 in excess payments, not to mention future impacts to my insurance premiums, so I’m not exactly a happy camper, even though I had full insurance.

Because I really don’t want to have to pay another $500 excess when the next muppet tries to break into it, I decided to spend the money to get an alarm installed, to deter anyone trying to break in again – going to all the effort to silence an alarm for a 1997 Toyota Starlet really won’t be worth the effort, sending them on to another easier target.

(I did consider some of those fake stickers and a blinky LED, but a real alarm does mean that if you hit the car, you’ll quickly get a chirp confirming there is an alarm present. Plus I get one of those chirpy remote controls to unlock the doors! :-D)

I do really hate car alarms, but it’s worth it to have something that will send anyone messing with my car running before it wakes up half the apartment complex.

I wanted to get a decent alarm installed properly and ended up getting referred to Mike & Lance at www.carstereoinstall.co.nz who do onsite visits to install which was really handy, and totally worth it after seeing all the effort needed to do the installation.

Car electronics spaghetti! Considering this is a pretty basic 1997 car, I'd hate to think what the newer ones are like...

There’s a bit of metal drilling, cable running, soldering, un-assembling parts of the car’s interior and trying to figure out which cables control which features of the car. All up, it took two guys about 2 hours to complete.

Cost about $325 for the alarm and labor, plus an extra $40 as they had to run wires and install switches for the boot, which is pretty good when you consider it’s a 4 man hour job. It would have taken all day if I’d done it at noob pace.

Would recommend these guys if you’re in Auckland. As an extra bonus, Mike turned out to be an ex-IT telco guy so we had some interesting chats – NZ is such a small world at times :-/