I don’t often do a heap of hands-on stuff these days as a manager, hence the lack of tech blog posts in the past… 5+ years, but I’ve been digging into LLMs a bunch lately, especially in the area of agentic coding (“vibe coding”), to see what the state of the technology is.

I’d describe myself as an AI realist. I can see the massive capabilities of the technology and use it daily, but I also don’t fully buy the hype that we’ll have thought leaders vibe coding entire applications without understanding the technology anytime soon. A capable understanding of what the heck it’s doing and what to instruct it to do is a key requirement IMHO. That said, I like to regularly challenge my assumptions around technology, and nothing beats getting hands-on with it for that.

I’ve used cloud-based frontier models like GPT-4.1, Claude, etc. These have been surprisingly good at greenfield development, especially in well-understood domains like web SaaS development when coupled with clear requirements in prompts.

One challenge I can see is the rate of token consumption, especially once the current VC-fueled loss leader era runs dry and we’re actually paying for the GPU time consumed. So I wanted to run some tests with local LLMs and see what’s possible there with the technology available today.

To be clear, I didn’t expect local to beat big cloud, but I was interested to see exactly how far behind the local options are in terms of hardware+model capabilities.

The Setup

I picked a simple example – an old Lambda I wrote back in 2017 and have basically not maintained since as it’s feature complete and AWS let me keep on using the node14 runtime. (The day Amazon introduces extended support pricing for older Lambda runtimes will be a tough day for me 😅).

This is ideal for a test of refactoring legacy code:

- Old JS standards and library choices

- Old v2 AWS SDK

- Last built on Node 14

- Using an early pre-release version of the Serverless framework.

- Simple “one file” app that shouldn’t tax any models with too much complexity.

For the test I enlisted my Windows gaming machine. Unfortunately the AMD RX580 8GB GPU it had, whilst great for my basic gaming needs, is pretty unloved by the AI development community and wasn’t going to cut it. (Side note: this GPU is abandoned in ROCm but you can get it to run with llama.cpp using Vulkan, so it’s not incapable, just not the prime choice in an ecosystem that is extremely NVIDIA centric. The vRAM size is also pretty small vs the sizes needed these days.)

Given the GPU issues, I upgraded it to a 5060 Ti, which was the most cost effective option I could get ($1000 NZD / ~$590 USD) that could run models reasonably well without breaking the bank. To get more vRAM, I’d end up needing a 5090, which comes in at a whopping $5000 NZD / ~$3000 USD and is a bit more than I’d like to spend on this experiment.

End result is a machine with:

- Windows 11 Home edition

- AMD Ryzen 7 3700X

- 32GB DDR4

- NVIDIA RTX 5060 Ti 16GB

For software, I ran Ollama directly on the host OS and exposed the connection to my MacBook, where I was running development tooling and using VS Code as the editor.
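For anyone wanting to poke at a similar split setup, here is a minimal sketch of calling an Ollama server on another machine over its HTTP API. It assumes Ollama has been told to listen on the network (by default it only binds to localhost; the `OLLAMA_HOST` environment variable changes that) and is using its default port 11434. The hostname `gaming-pc.local`, the `num_ctx` default and the helper function names are made up for illustration and are not taken from my actual configuration.

```python
import json
import urllib.request

# Hypothetical address of the Windows box running Ollama; 11434 is Ollama's default port.
OLLAMA_URL = "http://gaming-pc.local:11434"


def build_generate_request(model: str, prompt: str, num_ctx: int = 8192) -> urllib.request.Request:
    """Build a non-streaming request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON document instead of a stream of chunks
        "options": {"num_ctx": num_ctx},  # context window size (illustrative default)
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt to the remote Ollama server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=600) as resp:
        return json.loads(resp.read())["response"]
```

Calling `generate("qwen2.5-coder:7b", "Explain this function")` from the laptop would then run the model on the remote GPU. Editor extensions do the equivalent of this under the hood, with tool calling layered on top.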

One challenge is that VS Code’s Copilot extension on paper offers support for local LLM models as well as the cloud models, but Microsoft intentionally blocks them if you’re getting your Copilot account via a business plan of any kind because F you I guess. I worked around this with a tweak to one line of code and built the extension locally, but ended up abandoning it for the Continue.dev extension. Ollama and the Copilot extension weren’t working together correctly to handle tools with the model, an issue I was mostly able to work around with Continue.dev’s more flexible options.

Test 1: qwen3-coder:30b

See the PR at https://github.com/jethrocarr/lambda-ping/pull/9 for the full notes, prompts and challenges.

This model is a bit above what my hardware could handle. The model itself is 19GB, but with the larger context windows needed for agentic workloads I was consuming almost 26GB of memory. That forced the model to be split across CPU+RAM and GPU, which impacted processing performance considerably.
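To get a rough feel for why context inflates memory use so much, here is a back-of-the-envelope sketch of the usual key/value cache estimate for a transformer: two tensors (keys and values) per layer, each sized by KV heads × head dimension × context length. The layer count, head count and head dimension below are placeholder values chosen for easy arithmetic, not the real qwen3-coder:30b architecture (check the model card for those), and the estimate ignores compute buffers and other runtime overhead, so treat it as an approximation only.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   context_tokens: int, bytes_per_value: int = 2) -> int:
    """Approximate KV cache size: keys + values for every layer and token."""
    # The leading 2 accounts for storing both a key and a value tensor.
    return 2 * n_layers * n_kv_heads * head_dim * context_tokens * bytes_per_value


def total_memory_gib(weights_gib: float, n_layers: int, n_kv_heads: int,
                     head_dim: int, context_tokens: int,
                     bytes_per_value: int = 2) -> float:
    """Model weights plus KV cache, in GiB (ignores other runtime overhead)."""
    cache = kv_cache_bytes(n_layers, n_kv_heads, head_dim, context_tokens, bytes_per_value)
    return weights_gib + cache / 2**30


if __name__ == "__main__":
    # Placeholder architecture: 48 layers, 8 KV heads of dimension 128, fp16 cache.
    for ctx in (4096, 32768, 65536):
        print(ctx, "tokens ->", total_memory_gib(19, 48, 8, 128, ctx), "GiB")
```

With these placeholder numbers the cache grows linearly with context length, which is why a model that fits comfortably in vRAM with a small context can spill to system RAM once the context is sized for agentic work.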

Because of this, generation was super slow: it took me a couple of hours to complete the project once you count the waiting time for the various prompt runs. Unworkable as a normal workflow. To run this workload you really need 32GB of vRAM, which means an expensive RTX 5090 with 32GB, an (also expensive) Mac Studio with unified memory, or maybe the new Framework Desktop with its AMD Ryzen AI Max+ 395 chip and unified memory.

This model generated valid code, but it wasn’t particularly amazing work. It introduced a bug where only HTTPS requests would work (breaking HTTP), which required a prompt to fix. It did so by adding a conditional to handle HTTP vs HTTPS. It also didn’t adopt AWS SDK v3 until specifically instructed to do so.

Test 2: qwen2.5-coder:14b

See the PR at https://github.com/jethrocarr/lambda-ping/pull/10 for the full notes, prompts and challenges.

A smaller 9GB model that in theory fits entirely within my GPU, but with the context size it actually took around 19GB, again forcing a blend of CPU+RAM and GPU for the task. That said, it was considerably faster with 78% of the workload running inside the GPU, and not materially worse than the previous test for this specific coding scenario.

This model also generated valid code, although it too had a bug, this time not tracking the duration of the request, so it was non-functional on its first iteration. When prompted, it was able to correct this.

Test 3: qwen2.5-coder:7b

See the PR at https://github.com/jethrocarr/lambda-ping/pull/11 for the full notes, prompts and challenges.

A smaller model yet again, this one fits entirely within the GPU even with the large context and runs super fast thanks to this. Unfortunately the model is just too simplistic and was unable to build a functional application.

I would describe it as capable of writing “code shaped text”. It technically writes valid JavaScript but it just hallucinates up different libraries and mashes library names together. It’s also too simplistic to run properly agentically, so I ended up copying its output into the editor for each file it generated.

Test 4: Cloud LLM using Copilot and GPT-4.1

See the PR at https://github.com/jethrocarr/lambda-ping/pull/12 for the full notes, prompts and challenges.

I was originally intending to use Copilot with a few different models so started with the less premium GPT-4.1 and… it just nailed it right out of the gate, so there was no point even looking at the others. It essentially took a single prompt to refactor the JS into modern standards, it moved to AWS SDK v3 without prompting, and the only other prompts were to clean up some old deps it no longer needed and adjust the Node runtime for Lambda.

I did follow up with a stretch goal of trying to move it from Serverless to AWS SAM and it struggled a bit more here. I guess LLMs are a bit like many developers and struggle with devops/infrastructure tasks, so maybe devops has a bit more job security for a while… Essentially it got about 70% of the way there but had some mistakes with IAM policies and wrote files into some weird paths that needed moving around.

Learnings

- Hardware and affordability are a big challenge for doing serious agentic work locally. The hardware exists, but maybe not at a price most are willing to pay, especially when there’s a heap of cloud offerings right now at suspiciously cheap prices. Whether this can be sustained and what it ends up costing is still TBD. There’s a lot of speculation about what the true operating costs are, and pricing models/plans seem to change every 6 months as companies figure out what works.

- Ultimately if the value is there for the customer, the price being charged by cloud could scale somewhat infinitely. $200/mo for Cursor is a lot for a hobbyist, but for a professional development shop it’s chump change vs labour costs. If AI can make a developer 2x more effective and costs $2,000/mo? Still a bargain vs labour costs for a business.

- Local LLMs are a hedge against the above. If a cloud vendor gets too greedy, at certain levels it becomes more cost effective to lease or buy raw GPU power to run your own models. Of course there’s the issue of NVIDIA having a de facto monopoly on AI capable GPU hardware these past few years, but AMD seems to be catching up and bringing out great new products like Strix Halo, enabling products like the Framework Desktop with an AMD Ryzen AI Max+ 395 with up to 128GB of RAM. It might not have the fastest GPU cores ever, but it sure has a heap of unified memory to give those GPU cores a seriously massive pool of RAM. And of course Apple’s M-series architecture delivers similar capabilities, at a price premium.

- Time solves the above. The local LLMs feel like where ChatGPT was a few years ago, and computer hardware goes through periods where the technology is extremely expensive before trickling down to the consumer. Given a few more years, it’s feasible that we could see GPT-4.1 level capabilities from affordable commodity hardware.

- When factoring in memory size, it’s not just the size of the model, it’s the model plus all the context. This can easily double the effective working memory required, making the hardware needed even more expensive.

- All the models struggled with the more infra/devopsy type workload of needing to update a legacy deployment framework. I think this hints at a bit of the technology’s limitations right now: this specific scenario won’t have much reference material online to have trained on and requires somewhat specific understanding of the tool and the AWS ecosystem. As a former devops and Linux nerd this gives me the warm and fuzzies about job security… until next year’s release of AI lifts the bar again and can just talk directly to AWS or something.

- Windows is surprisingly… less trash than it used to be. Some key bits:

- WSL 2 (Linux on Windows) works, and most importantly for this topic, has GPU passthrough support so you can run CUDA workloads in a Linux environment using the NVIDIA hardware on the host itself. This is a massive win for anyone doing any kind of AI experimentation but not wanting to dual boot their gaming box or just not comfortable setting up Linux as a lab OS.

- Windows includes an OpenSSH server now. Yeah, I was surprised too. In true Windows fashion it’s a bit clunky, but you can now SSH into PowerShell once it’s set up. I haven’t yet been able to get SSH keys to work so it’s password auth only right now.

- Docker on Windows uses the WSL subsystem so again, has GPU access.

- WinGet gives Windows a half usable package manager.

- If you enable SVM virtualization and your Windows install just immediately bluescreens at boot, make sure mini dump is enabled so you can capture some kernel dumps and open them up to review. In my case, there was a legacy driver that crashed when running under Hyper-V that I was able to isolate and delete. Reading online, a lot of folks seem to resort to doing a Windows reinstall to fix issues with being unable to enable SVM.

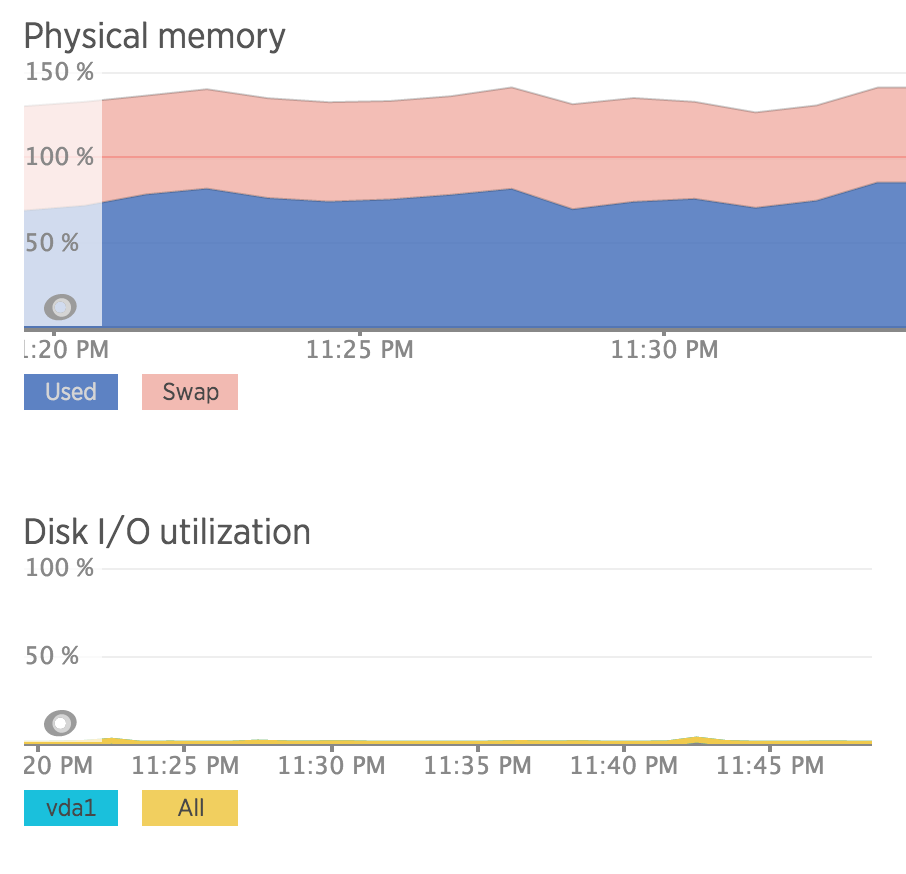

- WSL is a little funky with grabbing a lot of RAM for itself. For someone like myself running a mix of workloads in containers and on the host OS, I may need to start up/shut down WSL when changing mode (eg AI lab vs gaming), as WSL doesn’t dynamically claw back RAM that well, so you end up with a Linux VM holding onto 10GB of RAM for 1GB of idle workloads.

Rabbit Hole: Hardware

I haven’t really kept up with hardware in a major way in the past few years, so it was interesting researching ways to run local LLM workloads. ChatGPT is actually very good with an encyclopaedic knowledge of CPUs and chipset PCIe lane setups, and there is a heap of real world reports on Reddit of different hardware setups. Few things I learnt:

- My AM4 motherboard has a 16x PCIe slot, but it’s a PCIe 3.0 slot. The NVIDIA RTX 5060 Ti is a PCIe 5.0 card but only 8x. This is fine on PCIe 5.0 where the bus is fast, but when running at 3.0 speed it can bottleneck for AI as the effective bandwidth is roughly 8 GB/s vs what could be roughly 32 GB/s. It doesn’t impact gaming, which doesn’t get close to this much bandwidth, and it doesn’t impact a single model running entirely in GPU, but it does impact any models spilling to CPU+RAM like in my case when doing agentic workloads with large contexts.

- Consumer AMD and Intel CPUs don’t actually have heaps of PCIe lanes available. For example, AM5 offers 28 lanes which sounds like a heap, until you realise 16x goes directly to the GPU, 4x to the NVMe slot, 4x to the chipset and 4x general purpose (additional NVMe or expansion 4x slot).

- This means you’re not going to see consumer motherboards with multiple full 16x slots, so sorry, you can’t just build one AM5 box and put in 4x 5060s. Or if a board offers it, the lanes might be getting shared, eg the 16x GPU slot runs as 2x 8x slots, or you have slots hanging off the chipset and bottlenecked at 4x back to the CPU.

- … unless you go for workstation or server hardware, ie Threadripper, Epyc or Xeon, which does have serious amounts of lanes. You can get some good deals on used hardware here, but there’s a spanner in the works with the “good priced” deals being older generations limited to PCIe 3.0, which limits bandwidth.

- PCIe 3.0, 4.0, 5.0 – the difference is essentially 2x bandwidth per generation, so it is a pretty big deal for these bandwidth heavy AI workloads. It’s not such an issue for a single GPU if the workload fits entirely in vRAM, but if using multiple GPUs the bandwidth for moving data between them can become a bottleneck.

- As a side note of a side note, this is why most consumer NVMe NASes kinda suck performance wise – they’re not using chips capable of providing that many high performance PCIe lanes. They have other wins, like size, power, noise and reliability, but performance is a problem.

- To get actual max performance on NVMe you will need to use the 16x slot with bifurcation to pack in the M.2 NVMe cards, but that then only leaves the PCIe 4x slot for networking.

- And you’ll need to upgrade networking, as 10Gbit/s is basically nothing vs the speed of NVMe drives when a single drive is doing ~56Gbit/s. In theory a PCIe 5.0 4x slot can do 128Gbit/s, but I’ve not seen any QSFP+ cards that are PCIe 5.0 and 4x; most seem to be PCIe 3.0 8x cards. It might take a few more years for this hardware to catch up, as the server market seems happy enough with 8x cards and that is what’s driving this kind of networking gear.

- So the most cost effective top-performance NVMe NAS right now might be something like an “X99” motherboard with used Xeons and a heap of PCIe 3.0 lanes capable of supporting both NVMe storage and networking cards. Or, Apple M4-series hardware using Thunderbolt attached NVMe and Thunderbolt/USB4 networking to the Mac workstations needing access to the storage.

- Whilst I was digging into this subject I basically came to the conclusion that high performance NVMe NASes aren’t worth it right now for consumers. You need power hungry or expensive hardware to run the drives, and then you get bottlenecked on network and need to spend a bunch on server grade networking gear. Might as well just get Thunderbolt/USB4 NVMe enclosures and directly connect them to your Mac workstation. Even a MacBook Air with its 2x ports can support 1x external screen+power delivery and 1x NVMe drive concurrently, which would meet any editing or other high I/O workflow that you’d conceivably want to run on such a machine.
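The PCIe bandwidth figures quoted in this section can be sanity checked with some quick arithmetic. The sketch below uses the per-lane transfer rates for PCIe 3.0, 4.0 and 5.0 (8, 16 and 32 GT/s) and their 128b/130b line encoding, and gives theoretical one-direction maximums only. Real world throughput will be lower, and the function names are just for illustration.

```python
# Per-lane raw transfer rate in gigatransfers per second for each PCIe generation.
GT_PER_SEC = {"3.0": 8, "4.0": 16, "5.0": 32}

# PCIe 3.0 and later use 128b/130b encoding: 128 payload bits per 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbits_per_sec(generation: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in Gbit/s for a link."""
    return GT_PER_SEC[generation] * lanes * ENCODING_EFFICIENCY


def pcie_gbytes_per_sec(generation: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s for a link."""
    return pcie_gbits_per_sec(generation, lanes) / 8


if __name__ == "__main__":
    for gen, lanes in [("3.0", 8), ("5.0", 8), ("5.0", 4), ("3.0", 16)]:
        print(f"PCIe {gen} x{lanes}: "
              f"{pcie_gbytes_per_sec(gen, lanes):.2f} GB/s, "
              f"{pcie_gbits_per_sec(gen, lanes):.0f} Gbit/s")
```

This lines up with the rounded numbers above: an 8x card on a PCIe 3.0 slot gets a bit under 8 GB/s versus about 31.5 GB/s on PCIe 5.0, and a PCIe 5.0 4x slot tops out around 126 Gbit/s.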

Rabbit Hole: Other Reading

- https://www.arsturn.com/blog/running-qwen3-coder-30b-at-full-context-memory-requirements-performance-tips – good look at the qwen3-coder hardware requirements and options.

- https://github.com/ggml-org/llama.cpp/discussions/10879 – performance of GPUs when using Vulkan, handy reference if you have an older AMD card and are wondering where it stacks up.