I recently obtained an iPhone and needed to connect it to my VPN. However, my existing VPN server was an OpenVPN installation, which works beautifully on traditional desktop operating systems and Android, but the iOS client is a bit more questionable, having last been updated in September 2014 (pre-iOS 9).

I decided to look into what the “proper” VPN option would be for iOS in order to get something that should be supported by the OS as smoothly as possible. Last time I looked, this was full of wonderful horrors like PPTP (whose encryption is effectively broken!!) and L2TP/IPSec (configuration hell), so I had always avoided it like the plague.

However, as of iOS 9, Apple has implemented support for IKEv2 VPNs, which offers an interesting new option. What made this option particularly attractive for me is that I can support every device I have with the one VPN standard:

- IKEv2 is built into iOS 9 and MacOS El Capitan.

- IKEv2 is built into Windows 10.

- Works on Android with a third party client (hopeful for native integration soonish?).

- Naturally works on GNU/Linux.

Whilst I love OpenVPN, being able to use the stock OS features instead of a third party client is always nice, particularly on mobile where power management and background task behaviour can be interesting.

IKEv2 on mobile also has some other nice features, such as MOBIKE, which makes switching between different networks very seamless (like the cellular to WiFi dance we do constantly with phones/tablets). This is something that OpenVPN can’t do – whilst it’s generally fast and reliable at establishing a connection, a change in the network means issuing a reconnect; it doesn’t just move the current connection across.

Given that I run GNU/Linux servers, I went for one of the popular IPSec solutions available on most distributions – StrongSwan.

Unfortunately, whilst its technical capabilities are excellent, its documentation isn’t great. The best way to describe it is that every option is documented, but which options to use and why you’d want them? Not so much. The “left” vs “right” style documentation is also a right pain to work with; it’s not a configuration format that reads nicely and clearly.

Trying to find clear instructions and working examples of configurations for doing IKEv2 with iOS devices was also difficult, and there are some real traps for young players, such as generating SHA1 certs instead of SHA2 when using the tools with defaults.

The other fun is that I also wanted my iOS device set up properly to:

- Use certificate based authentication, rather than PSK.

- Only connect to the VPN when outside of my house.

- Remain connected to the VPN even when moving between networks, etc.

I found the best way to make it work was to use Apple Configurator to generate a .mobileconfig file for my iOS devices that includes all my VPN settings and certificates in an easy-to-import package, but also (critically) allows me to define options that are not selectable by end users, such as on-demand VPN establishment.

After a few nights of messing around and cursing the fact that all the major OS vendors haven’t just implemented OpenVPN, I managed to get a working connection. To spare others the same pain, I considered writing a guide – but it’s actually a really complex setup, so instead I decided to write a Puppet module (clone from GitHub or install from the Puppet Forge) which does the following heavy lifting for you:

- Installs StrongSwan (on a Debian/derived GNU/Linux system).

- Configures StrongSwan for IKEv2 roadwarrior style VPNs.

- Generates all the CA, cert and key files for the VPN server.

- Generates each client’s certs for you.

- Generates a .mobileconfig file for iOS devices so you can have a single import of all the configuration, certs and ondemand rules and don’t have to have a Mac to use Apple Configurator.

This means you can save yourself all the heavy lifting and set up a VPN with as little as the following Puppet code:

    class { 'roadwarrior':
      manage_firewall_v4 => true,
      manage_firewall_v6 => true,
      vpn_name           => 'vpn.example.com',
      vpn_range_v4       => '10.10.10.0/24',
      vpn_route_v4       => '192.168.0.0/16',
    }

    roadwarrior::client { 'myiphone':
      ondemand_connect       => true,
      ondemand_ssid_excludes => ['wifihouse'],
    }

    roadwarrior::client { 'android': }

The above example sets up a routed VPN using 10.10.10.0/24 as the VPN client range and routes the 192.168.0.0/16 network behind the VPN server back through. (Note that I haven’t added masquerading options yet, so your gateway has to know to route the vpn_range back to the VPN server).
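To make the routing caveat concrete, here is a minimal Python sketch of the sanity checks and the static route your gateway would need. It is not part of the Puppet module: the function name `gateway_route` and the VPN server LAN address `192.168.0.10` are made up for illustration, and the printed command assumes a Linux gateway using iproute2.

```python
import ipaddress


def gateway_route(vpn_range, lan_route, vpn_server_lan_ip):
    """Return the static route a Linux gateway needs for VPN return traffic.

    All three arguments are illustrative inputs, not module parameters.
    """
    pool = ipaddress.ip_network(vpn_range)
    lan = ipaddress.ip_network(lan_route)
    server = ipaddress.ip_address(vpn_server_lan_ip)

    # The client pool must not collide with the network being routed.
    if pool.overlaps(lan):
        raise ValueError(f"{pool} overlaps routed network {lan}")

    # The next hop has to be reachable on the LAN side.
    if server not in lan:
        raise ValueError(f"{server} is not inside {lan}")

    return f"ip route add {pool} via {server}"


# 192.168.0.10 is a hypothetical LAN address for the VPN server.
print(gateway_route("10.10.10.0/24", "192.168.0.0/16", "192.168.0.10"))
```

Without a route like this (or masquerading on the VPN server), replies from LAN hosts to 10.10.10.0/24 head to the default gateway and never make it back to the VPN clients.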

It then defines two clients – “myiphone” and “android”. And in the .mobileconfig file generated for the “myiphone” client, it will specifically generate rules that cause the VPN to maintain a constant connection, except when connected to a WiFi network called “wifihouse”.
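If you’re curious what such on-demand rules look like inside a .mobileconfig, here is a rough Python sketch using the standard library’s `plistlib`. The keys (`OnDemandEnabled`, `OnDemandRules`, `Action`, `InterfaceTypeMatch`, `SSIDMatch`) come from Apple’s configuration profile format, but the helper `build_ondemand_rules` is hypothetical and the output is only a fragment of the VPN payload’s IKEv2 dictionary. It is not necessarily byte-for-byte what the module generates.

```python
import plistlib


def build_ondemand_rules(ssid_excludes):
    """Build VPN on-demand rules; the first matching rule wins.

    Hypothetical helper for illustration, not taken from the module.
    """
    rules = []
    if ssid_excludes:
        # On a trusted WiFi network, tear the VPN down.
        rules.append({
            "Action": "Disconnect",
            "InterfaceTypeMatch": "WiFi",
            "SSIDMatch": list(ssid_excludes),
        })
    # Catch-all: bring the VPN up on any other network.
    rules.append({"Action": "Connect"})
    return rules


# Fragment only; a real profile also needs server, identity and cert settings.
vpn_fragment = {
    "OnDemandEnabled": 1,
    "OnDemandRules": build_ondemand_rules(["wifihouse"]),
}

xml_bytes = plistlib.dumps(vpn_fragment)
print(xml_bytes.decode("utf-8"))
```

Rule order matters: the “wifihouse” rule has to come before the catch-all connect rule, otherwise the device would never get as far as the disconnect.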

The certs and .mobileconfig files are helpfully placed in /etc/ipsec.d/dist/ for your rsync’ing pleasure including a few different formats to help load onto fussy devices.

Hopefully this module is useful to some of you. If you’re new to Puppet but want to take advantage of it, you could always check out my introduction to Puppet with Pupistry guide.

If you’re not sure of my Puppet modules or prefer other config management systems (or *gasp* none at all!) the Puppet module should be fairly readable and easy enough to translate into your own commands to run.

There are a few things I still want to do – I haven’t yet done IPv6 configuration (which I’ll fix since I run a dual-stack network everywhere) and I intend to add a masquerade firewall feature for those struggling with routing properly between their VPN and LAN.

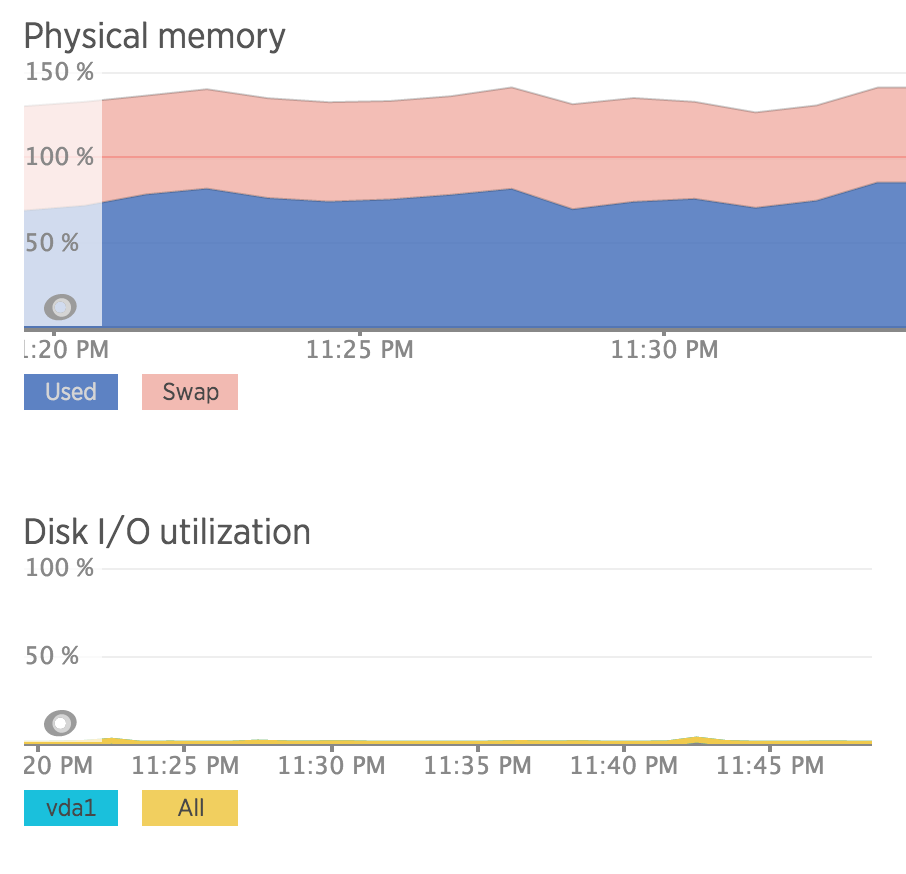

I’ve been using this configuration for a few weeks on a couple of iOS 9.3.1 devices and it’s been working beautifully, especially with the ondemand configuration, which unfortunately I haven’t been able to do on any other devices (like Android or MacOS) yet. The power consumption overhead seems minimal, but of course your mileage may vary.

It would be good to test with Windows 10 and as many other devices as possible. To keep things simple, I don’t intend for this module to support non-roadwarrior type configs (eg site-to-site linking), but I’m happy to merge any PRs that make it easier to connect more mobile devices or branch routers back to a main VPN host. I’m also happy to merge PRs for more GNU/Linux distribution support; I currently only support Debian/Ubuntu, but it shouldn’t be hard to add others.

If you’re on Android, this VPN will work for you, but you may find the OpenVPN client better and more flexible since the Android client doesn’t have the same level of on-demand functionality that iOS has built in. You may also find OpenVPN a better option if you’re regularly using restrictive networks that only allow “HTTPS” out, since it can be run on TCP/443, whereas StrongSwan IKEv2 runs on UDP ports 500 and 4500.